The Best Way to Lose Money With DeepSeek

Posted by Starla Potter on 25-03-10 06:56

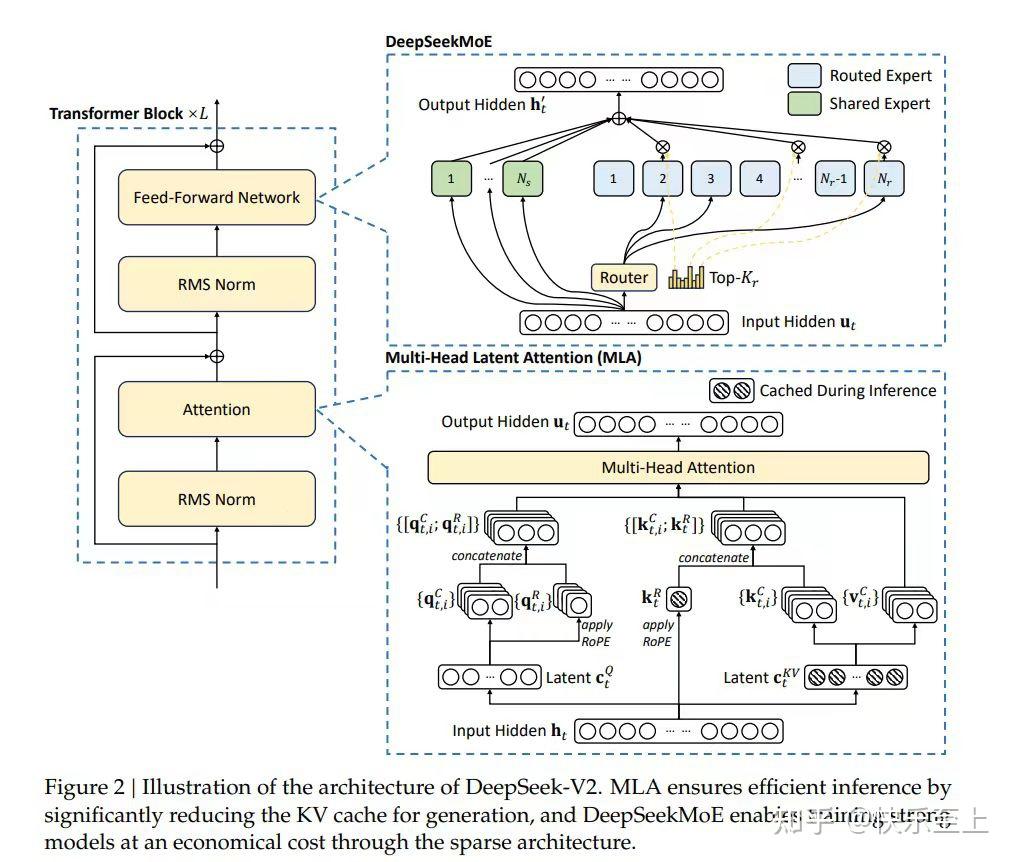

White House Press Secretary Karoline Leavitt recently confirmed that the National Security Council is investigating whether DeepSeek poses a potential national security threat. And if future versions of this are quite dangerous, it means that it’s going to be very hard to keep that contained to one country or one set of companies. Ultimately, AI companies in the US and other democracies must have better models than those in China if we want to prevail. Whether it is leveraging a Mixture of Experts approach, focusing on code generation, or excelling in language-specific tasks, DeepSeek models offer cutting-edge solutions for a range of AI challenges. This model adopts a Mixture of Experts approach to scale up parameter count efficiently. This modification prompts the model to recognize the end of a sequence differently, thereby facilitating code completion tasks. Fix: Use stricter prompts (e.g., "Answer using only the provided context") or upgrade to a larger model such as the 32B variant. The Mixture of Experts approach enables DeepSeek V3 to achieve performance levels comparable to dense models with the same number of total parameters, despite activating only a fraction of them.
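To make the "activating only a fraction of the parameters" idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration under stated assumptions, not DeepSeek's actual implementation: the names `moe_forward` and `make_expert`, the toy scaling experts, the random gate weights, and the choice of `top_k=2` are all hypothetical.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical toy expert: multiplies every input value by `scale`."""
    def expert(x):
        return [scale * v for v in x]
    return expert


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input x to the top_k highest-scoring experts and mix their outputs.

    gate_weights holds one row per expert; the gate logit for an expert is
    the dot product of its row with x. Experts outside the top_k are never
    called, which is where the compute savings come from.
    """
    logits = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    weights = softmax([logits[i] for i in top])  # renormalize over chosen experts
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, top


rng = random.Random(0)
num_experts, dim = 8, 4
experts = [make_expert(i + 1.0) for i in range(num_experts)]
gate_weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]
out, used = moe_forward([1.0, 0.5, -0.5, 2.0], gate_weights, experts, top_k=2)
print(f"experts evaluated: {len(used)} of {num_experts}")
```

All eight experts count toward the total parameter count, but only two run for any given input. Production MoE layers add details this sketch omits, such as load balancing across experts.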

This open-weight large language model from China activates only a fraction of its vast parameter count during processing, leveraging a refined Mixture of Experts (MoE) architecture for efficiency. According to industry experts, the company trained its models for around $6 million, a fraction of the hundreds of millions spent by OpenAI. Since the company was founded in 2023, DeepSeek has released a series of generative AI models. On April 28, 2023, ChatGPT was restored in Italy and OpenAI said it had "addressed or clarified" the issues raised by the Garante. Enter DeepSeek R1, a free, open-source language model that rivals GPT-4 and Claude 3.5 in reasoning and coding tasks. For instance, its 32B parameter variant outperforms OpenAI’s o1-mini in code generation benchmarks, and its 70B model matches Claude 3.5 Sonnet in complex tasks. This is ideal if you occasionally want to compare outputs with models like GPT-4 or Claude but want DeepSeek R1 as your default. DeepSeek consistently adheres to the route of open-source models with longtermism, aiming to steadily approach the ultimate goal of AGI (Artificial General Intelligence). Introducing DeepSeek-V3, an advance that has set a new standard in the field of artificial intelligence.

Let's delve into the features and architecture that make DeepSeek V3 a pioneering model in the field of artificial intelligence. An evolution like the one from the earlier Llama 2 model to the enhanced Llama 3 (both Meta models) illustrates the commitment to continuous improvement and innovation in the AI landscape that DeepSeek V3 also reflects. As users engage with this advanced AI model, they have the opportunity to unlock new possibilities, drive innovation, and contribute to the continuous evolution of AI technologies. The evolution to this model showcases improvements that have elevated the capabilities of the DeepSeek AI model. Users can expect improved model performance and heightened capabilities as a result of the rigorous enhancements incorporated into this latest version. "The Chinese engineers had limited resources, and they had to find creative solutions." These workarounds seem to have included limiting the number of calculations that DeepSeek-R1 carries out relative to comparable models, and using the chips that were available to a Chinese company in ways that maximize their capabilities. I want a workflow as simple as "brew install avsm/ocaml/srcsetter" and have it install a working binary version of my CLI utility. Whether or not the export controls are going to deliver the kind of results that the China hawks say they will, or that their critics say they won't, I don't think we really have an answer one way or the other yet.

In 2025, Nvidia research scientist Jim Fan referred to DeepSeek as the 'biggest dark horse' in this field, underscoring its significant impact on transforming the way AI models are trained. The impact of DeepSeek on AI training is profound, challenging conventional methodologies and paving the way for more efficient and powerful AI systems. The chatbot became more widely accessible when it appeared on the Apple and Google app stores early this year. How can we evaluate a system that uses more than one AI agent to ensure that it functions correctly? Let's explore three key models: DeepSeekMoE, which uses a Mixture of Experts approach, and DeepSeek-Coder and DeepSeek-LLM, designed for specific purposes. 2. Navigate to API Keys and create a new key. 2. Select "OpenAI-Compatible" as the API provider. Trained on a vast dataset comprising roughly 87% code, 10% English code-related natural language, and 3% Chinese natural language, DeepSeek-Coder undergoes rigorous data quality filtering to ensure precision and accuracy in its coding capabilities. DeepSeek Version 3 represents a shift in the AI landscape with its advanced capabilities. DeepSeek Version 3 distinguishes itself by its distinctive incorporation of the Mixture of Experts (MoE) architecture, as highlighted in a technical deep dive on Medium.
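The API-key and "OpenAI-Compatible" steps mentioned above can be sketched as a request builder. This is a hedged illustration using only the Python standard library. The helper name `build_chat_request`, the base URL `https://api.deepseek.com`, the `/chat/completions` path, and the model name `deepseek-chat` are assumptions that should be checked against the provider's current documentation. The system prompt reuses the "answer using only the provided context" wording quoted earlier in the post.

```python
import json
import urllib.request


def build_chat_request(api_key, question, context,
                       base_url="https://api.deepseek.com",  # assumed endpoint
                       model="deepseek-chat"):               # assumed model name
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [
            # Strict system prompt to discourage answers from outside the context.
            {"role": "system",
             "content": "Answer using only the provided context."},
            {"role": "user",
             "content": f"Context:\n{context}\n\nQuestion: {question}"},
        ],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # the key created under API Keys
        },
        method="POST",
    )


req = build_chat_request(
    api_key="YOUR_API_KEY",  # placeholder, never hard-code a real key
    question="What does Mixture of Experts mean?",
    context="A Mixture of Experts model activates only some experts per input.",
)
print(req.get_method(), req.full_url)
```

To send the request, pass `req` to `urllib.request.urlopen` and decode the JSON response. The sketch stops short of that so it runs without network access or a valid key.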
