DeepSeek AI Launches Multimodal "Janus-Pro-7B" Model with Im…


Author: Nadia Steinman | Date: 25-03-09 16:29 | Views: 6 | Comments: 0


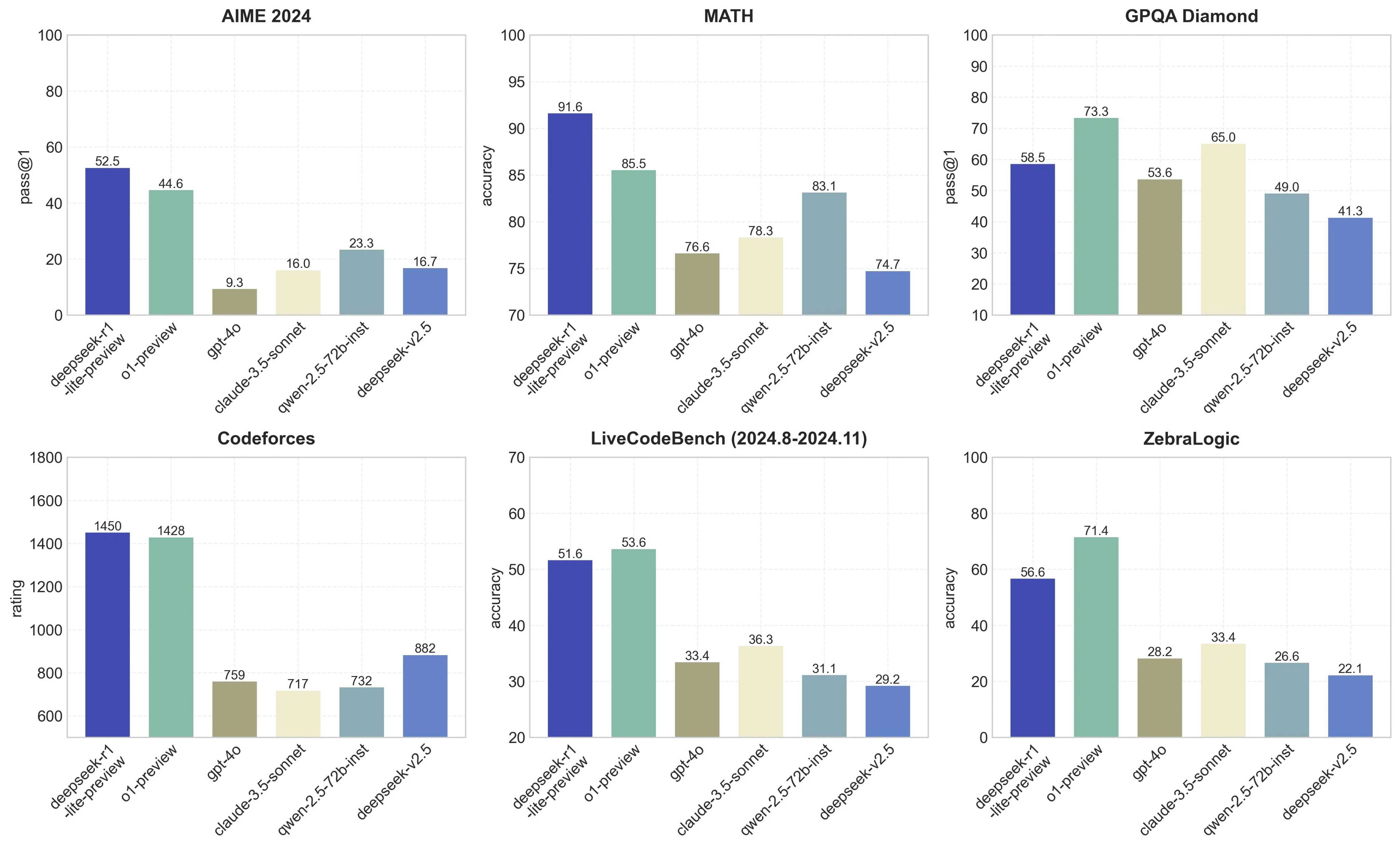

Open Models. In this project, we used various proprietary frontier LLMs, such as GPT-4o and Sonnet, but we also explored using open models like DeepSeek and Llama-3. DeepSeek Coder V2 has demonstrated exceptional performance across various benchmarks, often surpassing closed-source models like GPT-4 Turbo, Claude 3 Opus, and Gemini 1.5 Pro in coding and math-specific tasks. For example, that is much less steep than the original GPT-4 to Claude 3.5 Sonnet inference price differential (10x), and 3.5 Sonnet is a better model than GPT-4. This update introduces compressed latent vectors to boost performance and reduce memory usage during inference. To ensure unbiased and thorough performance assessments, DeepSeek AI used new problem sets, such as the Hungarian National High-School Exam and Google's instruction-following evaluation dataset. 2. Train the model using your dataset. Fix: Use stricter prompts (e.g., "Answer using only the provided context") or upgrade to larger models like 32B. However, users should be aware of the ethical considerations that come with using such a powerful and uncensored model. However, DeepSeek-R1-Zero encounters challenges such as endless repetition, poor readability, and language mixing. This extensive language support makes DeepSeek Coder V2 a versatile tool for developers working across various platforms and technologies.
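The "stricter prompt" fix mentioned above can be sketched as a small helper. This is a minimal illustration, not an official DeepSeek API: the function name `build_grounded_prompt` and the fallback instruction to answer "I don't know" are assumptions made for the example.

```python
def build_grounded_prompt(context: str, question: str) -> str:
    """Return a prompt that restricts the model to the supplied context.

    Hypothetical helper: the exact wording of the fallback instruction
    is an assumption, not something the original text specifies.
    """
    return (
        "Answer using only the provided context. "
        "If the context does not contain the answer, say \"I don't know\".\n\n"
        f"Context:\n{context.strip()}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


prompt = build_grounded_prompt(
    context="DeepSeek Coder V2 supports 338 programming languages.",
    question="How many programming languages does DeepSeek Coder V2 support?",
)
print(prompt)
```

The resulting string can be sent to whichever model endpoint you use; the restriction lives entirely in the prompt text, so it works the same for small and large models.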


DeepSeek is a powerful AI tool designed to help with a variety of tasks, from programming assistance to data analysis. A general-purpose model that combines advanced analytics capabilities with a 13 billion parameter count, it can perform in-depth data analysis and support complex decision-making processes. Whether you're building simple models or deploying advanced AI solutions, DeepSeek offers the capabilities you need to succeed. With its impressive capabilities and performance, DeepSeek Coder V2 is poised to become a game-changer for developers, researchers, and AI enthusiasts alike. Despite its excellent performance, DeepSeek-V3 requires only 2.788M H800 GPU hours for its full training. Fix: Always provide full file paths (e.g., /src/components/Login.jsx) instead of vague references. You get GPT-4-level smarts without the cost, full control over privacy, and a workflow that feels like pairing with a senior developer. For Code: Include explicit instructions like "Use Python 3.11 and type hints". AI observer Rowan Cheung indicated that the new model outperforms competitors OpenAI's DALL-E 3 and Stability AI's Stable Diffusion on some benchmarks like GenEval and DPG-Bench. The model supports an impressive 338 programming languages, a significant increase from the 86 languages supported by its predecessor.
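The two prompting tips above (full file paths and explicit instructions) can be combined in a small request builder. This is a sketch under stated assumptions: the `CodeRequest` class, the rule that a "full" path starts with `/`, and the example path `/src/services/auth.py` are all hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, field


@dataclass
class CodeRequest:
    """Hypothetical container for a coding prompt."""
    task: str
    file_paths: list[str]
    constraints: list[str] = field(
        default_factory=lambda: ["Use Python 3.11 and type hints"]
    )

    def to_prompt(self) -> str:
        # Assumption: a "full" path is one that starts at the project root.
        vague = [p for p in self.file_paths if not p.startswith("/")]
        if vague:
            raise ValueError(f"Provide full file paths, got: {vague}")
        lines = [f"Task: {self.task}", "Files:"]
        lines += [f"- {p}" for p in self.file_paths]
        lines.append("Constraints:")
        lines += [f"- {c}" for c in self.constraints]
        return "\n".join(lines)


request = CodeRequest(
    task="Add input validation to the login handler",
    file_paths=["/src/services/auth.py"],  # hypothetical path
)
code_prompt = request.to_prompt()
print(code_prompt)
```

Rejecting vague references up front is a design choice: it fails fast in your own tooling rather than letting the model guess which file you meant.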


Its supported programming languages expand from 86 to 338, covering mainstream and niche languages to meet diverse development needs. Optimize your model's performance by fine-tuning hyperparameters. This significant improvement highlights the efficacy of our RL algorithm in optimizing the model's performance over time. Monitor Performance: Track latency and accuracy over time. Use pre-trained models to save time and resources. As generative AI enters its second year, the conversation around large models is shifting from consensus to differentiation, with the debate centered on belief versus skepticism. By making its models and training data publicly available, the company encourages thorough scrutiny, allowing the community to identify and address potential biases and ethical issues. Regular testing of each new app version helps enterprises and businesses identify and address security and privacy risks that violate policy or exceed an acceptable level of risk. To address this problem, we randomly split a certain proportion of such combined tokens during training, which exposes the model to a wider array of special cases and mitigates this bias. Collect, clean, and preprocess your data to make sure it's ready for model training.
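The "Monitor Performance" tip can be sketched with the standard library alone. The `PerformanceMonitor` class and the stand-in `fake_model` function below are hypothetical; exact string match is used as the accuracy check purely for illustration, and real evaluations usually need a task-specific metric.

```python
import time
from typing import Callable


class PerformanceMonitor:
    """Hypothetical tracker for per-call latency and exact-match accuracy."""

    def __init__(self) -> None:
        self.latencies: list[float] = []
        self.correct: list[bool] = []

    def record(self, model: Callable[[str], str], prompt: str, expected: str) -> str:
        # Time a single model call and compare its output to the expected answer.
        start = time.perf_counter()
        output = model(prompt)
        self.latencies.append(time.perf_counter() - start)
        self.correct.append(output.strip() == expected.strip())
        return output

    def summary(self) -> dict[str, float]:
        calls = len(self.latencies)
        if calls == 0:
            return {"calls": 0.0, "mean_latency_s": 0.0, "accuracy": 0.0}
        return {
            "calls": float(calls),
            "mean_latency_s": sum(self.latencies) / calls,
            "accuracy": sum(self.correct) / calls,
        }


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call; it only knows one answer.
    return "4" if prompt == "2+2?" else "unknown"


monitor = PerformanceMonitor()
monitor.record(fake_model, "2+2?", "4")
monitor.record(fake_model, "3+3?", "6")
stats = monitor.summary()
print(stats)
```

Logging these summaries at regular intervals gives the "over time" view: a rising mean latency or falling accuracy is the signal to investigate.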

DeepSeek Coder V2 is the result of an innovative training process that builds upon the success of its predecessors. Critically, DeepSeekMoE also introduced new approaches to load-balancing and routing during training; traditionally, MoE increased communication overhead in training in exchange for efficient inference, but DeepSeek's approach made training more efficient as well. Some critics argue that DeepSeek has not introduced fundamentally new techniques but has merely refined existing ones. For those who prefer a more interactive experience, DeepSeek offers a web-based chat interface where you can interact with DeepSeek Coder V2 directly. DeepSeek is a versatile and powerful AI tool that can significantly enhance your projects. This level of mathematical reasoning capability makes DeepSeek Coder V2 a valuable tool for students, educators, and researchers in mathematics and related fields. DeepSeek Coder V2 employs a Mixture-of-Experts (MoE) architecture, which allows for efficient scaling of model capacity while keeping computational requirements manageable.
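To make the Mixture-of-Experts idea concrete, here is a toy sketch of generic top-k routing in plain Python. It is not DeepSeek's actual routing or load-balancing scheme; the scalar "experts", the fixed gate scores, and the function names are all assumptions chosen to keep the example small. The point it illustrates is that only `top_k` experts run per input, so capacity can grow without every expert being evaluated.

```python
import math
from typing import Callable, Sequence

Expert = Callable[[float], float]


def softmax(scores: Sequence[float]) -> list[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    gate_scores: Sequence[float],
    experts: Sequence[Expert],
    top_k: int = 2,
) -> float:
    """Route x to the top_k highest-scoring experts and mix their outputs."""
    probs = softmax(gate_scores)
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in top)  # renormalize over the selected experts
    return sum(probs[i] / norm * experts[i](x) for i in top)


# Four toy experts; the gate strongly prefers the first two.
experts: list[Expert] = [
    lambda x: x + 1.0,
    lambda x: 2.0 * x,
    lambda x: -x,
    lambda x: x * x,
]
output = moe_forward(3.0, gate_scores=[0.0, 0.0, -10.0, -10.0], experts=experts)
print(output)
```

In a real model the gate scores come from a learned linear layer over the token's hidden state, and the experts are feed-forward networks rather than scalar functions.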

