Do You Make These DeepSeek Mistakes?


Author: Berniece · Posted: 25-03-09 05:54


The Eye of Sauron has now descended upon DeepSeek. Now we install and configure the NVIDIA Container Toolkit by following these instructions. Note again that x.x.x.x is the IP of your machine hosting the Ollama Docker container. Next, download and install VS Code on your developer machine. Now we need the Continue VS Code extension. Refer to the Continue VS Code page for details on how to use the extension. Note that you can toggle tab code completion off/on by clicking on the Continue text in the lower-right status bar.

Using pre-trained models like DeepSeek can speed up development, but fine-tuning and customization still take time. Also, it looks like the competition is catching up anyway. Forbes reported that Nvidia's market value "fell by about $590 billion Monday, rose by roughly $260 billion Tuesday and dropped $160 billion Wednesday morning." Other tech giants, like Oracle, Microsoft, Alphabet (Google's parent company) and ASML (a Dutch chip equipment maker), also faced notable losses.

Also note that if the model is too slow, you might want to try a smaller model like "deepseek-coder:latest".
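Once the container is running, you can send it a prompt from your developer machine. The sketch below is a minimal example, assuming Ollama listens on its default port 11434, exposes the `/api/generate` endpoint, and already has the `deepseek-coder:latest` tag pulled. `x.x.x.x` stays a placeholder for your host's IP, and the helper names `build_generate_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Hypothetical helper names; "x.x.x.x" is a placeholder for the Docker host's IP.
OLLAMA_HOST = "x.x.x.x"
OLLAMA_PORT = 11434  # Ollama's default port (assumption: not remapped in Docker)


def build_generate_request(host: str, model: str, prompt: str,
                           port: int = OLLAMA_PORT) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    body = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for a single JSON object instead of a token stream
    }
    return urllib.request.Request(
        url=f"http://{host}:{port}/api/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(host: str, model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text from the 'response' field."""
    request = build_generate_request(host, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        payload = json.loads(reply.read().decode("utf-8"))
    return payload["response"]
```

For example, `generate(OLLAMA_HOST, "deepseek-coder:latest", "Write a function that reverses a string.")` would return the model's answer as a string. If generation is too slow, pass a smaller model tag instead.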

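The Continue extension must be pointed at the remote Ollama container. The sketch below generates that configuration. It assumes the JSON schema used by older Continue releases (`models`, `tabAutocompleteModel`, `provider`, `model`, `apiBase` keys). Newer releases use a YAML config with different keys, so check the Continue documentation for your version. The function name and titles are hypothetical.

```python
import json

# Assumption: the config.json schema of older Continue releases.
# Newer Continue versions use config.yaml with a different layout.


def build_continue_config(host: str, chat_model: str,
                          autocomplete_model: str, port: int = 11434) -> dict:
    """Return a Continue config that sends chat and tab completion to a remote Ollama."""
    api_base = f"http://{host}:{port}"  # the machine hosting the Ollama container
    return {
        "models": [
            {
                "title": f"{chat_model} (remote Ollama)",
                "provider": "ollama",
                "model": chat_model,
                "apiBase": api_base,
            }
        ],
        # Model used for inline tab completion (toggled from the status bar).
        "tabAutocompleteModel": {
            "title": f"{autocomplete_model} (autocomplete)",
            "provider": "ollama",
            "model": autocomplete_model,
            "apiBase": api_base,
        },
    }


if __name__ == "__main__":
    config = build_continue_config("x.x.x.x", "deepseek-coder:latest",
                                   "deepseek-coder:latest")
    # Paste the printed JSON into the config file opened from the command palette.
    print(json.dumps(config, indent=2))
```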

If you're looking for something cost-efficient, fast, and great for technical tasks, DeepSeek may be the way to go. But after looking through the WhatsApp documentation and Indian tech videos (yes, we all did look at the Indian IT tutorials), it wasn't really much different from Slack. Check the unsupported list if your driver version is older. Note that you should select the NVIDIA Docker image that matches your CUDA driver version. Follow the instructions to install Docker on Ubuntu. You may need to play around with this one. It's good to play around with new models and get a feel for them, so you understand them better. We further conduct supervised fine-tuning (SFT) and Direct Preference Optimization (DPO) on DeepSeek LLM Base models, resulting in the creation of DeepSeek Chat models. It is a lot easier, though, to connect the WhatsApp Chat API with OpenAI. I pull the DeepSeek Coder model and use the Ollama API service to create a prompt and get the generated response. Medical staff (also generated via LLMs) work in different parts of the hospital, taking on different roles (e.g., radiology, dermatology, internal medicine, etc.). Second, LLMs have goldfish-sized working memory.
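To make the DPO step more concrete, here is a minimal sketch of the standard per-pair DPO loss from the original DPO formulation. The text does not say which hyperparameters DeepSeek used, so `beta = 0.1` is an illustrative assumption. The inputs are summed log-probabilities of the preferred (chosen) and dispreferred (rejected) responses under the policy being trained and under a frozen reference model.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair (beta = 0.1 is an assumed value).

    Each argument is a summed log-probability of a full response given the prompt.
    """
    chosen_ratio = policy_chosen - ref_chosen        # log pi(y_w|x) - log pi_ref(y_w|x)
    rejected_ratio = policy_rejected - ref_rejected  # log pi(y_l|x) - log pi_ref(y_l|x)
    # The loss falls as the policy raises the chosen response relative to the rejected one.
    return -log_sigmoid(beta * (chosen_ratio - rejected_ratio))
```

When the policy still equals the reference model, both ratios are zero and the loss is log 2. Unlike RLHF with PPO, no separate reward model or sampling loop is needed; the preference pairs are used directly.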


These companies have rushed to launch DeepSeek-powered models, facilitating AI integration without hefty infrastructure investments. We compare the judgment ability of DeepSeek-V3 with state-of-the-art models, namely GPT-4o and Claude-3.5. The company unveiled a mix of open-source and proprietary models, alongside updates to its cloud infrastructure. The company says the DeepSeek-V3 model cost roughly $5.6 million to train using Nvidia's H800 chips. This reward model was then used to train Instruct using Group Relative Policy Optimization (GRPO) on a dataset of 144K math questions "related to GSM8K and MATH". Now configure Continue by opening the command palette (you can select "View" from the menu, then "Command Palette", if you don't know the keyboard shortcut). Then I, as a developer, wanted to challenge myself to create a similar bot. The ROC curve above shows the same findings, with a clear split in classification accuracy when we evaluate token lengths above and below 300 tokens.
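The distinctive part of GRPO is how it scores answers without a learned value model. For each question it samples a group of answers, and each answer's advantage is its reward relative to the rest of the group. The sketch below shows that outcome-reward normalization only (not the full clipped policy update). It assumes population standard deviation and a small `eps` for numerical safety; implementations differ on both.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """GRPO-style advantages for one group of answers sampled for the same question.

    Each answer is scored against its own group: (reward - group mean) / group std.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std (assumption; some code uses sample std)
    return [(r - mean) / (std + eps) for r in rewards]
```

For instance, if four sampled answers to one math question are graded 1 (correct) or 0 (wrong) as `[1, 0, 1, 0]`, the correct answers get an advantage of about +1 and the wrong ones about -1. A group where every answer scores the same contributes no learning signal.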

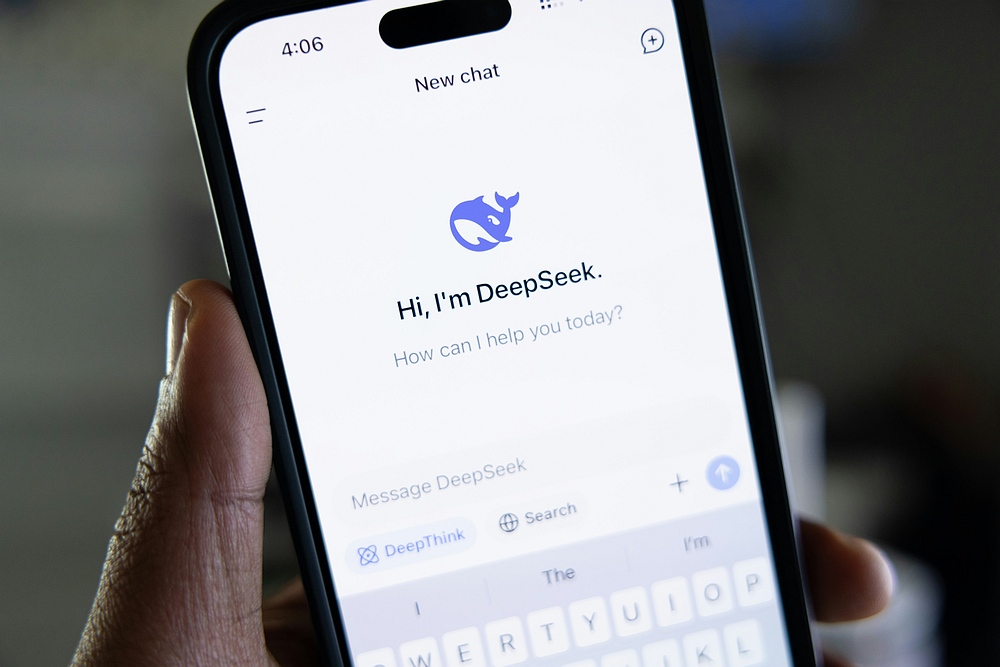
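The text does not say which classifier or scores produced the ROC curve, so the following is only a sketch of how such a split could be checked. It computes ROC AUC separately for samples shorter than 300 tokens and for those of 300 tokens or more. The AUC uses the rank (Mann-Whitney) formulation, and placing the boundary exactly at 300 is an assumption. Function names and any data you pass in are hypothetical.

```python
def roc_auc(scores: list[float], labels: list[int]) -> float:
    """ROC AUC as the probability that a random positive outscores a random negative.

    Ties count as half a win. O(P * N), which is fine for a quick sanity check.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def auc_by_length(scores: list[float], labels: list[int],
                  token_lengths: list[int], threshold: int = 300) -> dict[str, float]:
    """Split samples at a token-length threshold and compute AUC on each side."""
    short_samples = [(s, y) for s, y, n in zip(scores, labels, token_lengths) if n < threshold]
    long_samples = [(s, y) for s, y, n in zip(scores, labels, token_lengths) if n >= threshold]
    return {
        f"< {threshold} tokens": roc_auc([s for s, _ in short_samples],
                                         [y for _, y in short_samples]),
        f">= {threshold} tokens": roc_auc([s for s, _ in long_samples],
                                          [y for _, y in long_samples]),
    }
```

An AUC near 1.0 on one side of the threshold and near 0.5 on the other would show the kind of split the curve describes.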

A natural question arises concerning the acceptance rate of the additionally predicted token. The FIM strategy is applied at a rate of 0.1, consistent with the PSM framework. This focus allows the company to concentrate on advancing foundational AI technologies without immediate commercial pressures. Then, in January, the company released a free chatbot app, which quickly gained popularity and rose to the top spot in Apple's App Store. But DeepSeek also released six "distilled" versions of R1, ranging in size from 1.5 billion to 70 billion parameters. DeepSeek-AI has released an MIT-licensed reasoning model known as DeepSeek-R1, which performs as well as or better than available reasoning models from closed-source model providers. Now we're ready to start hosting some AI models. Save the file, click the Continue icon in the left sidebar, and you should be good to go. Click Cancel if it asks you to sign in to GitHub. To address this, we set a maximum extension limit for each node, but this can lead to the model getting stuck in local optima. Part of it was getting familiar with how Slack works. If you're familiar with this, you can skip ahead to the next subsection.
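Applying FIM "at a rate of 0.1 in the PSM framework" means that roughly one training document in ten is cut into prefix, middle, and suffix, then reordered as Prefix-Suffix-Middle so the model learns to fill in the middle from both sides. The sketch below assumes per-document application with uniformly random cut points. The sentinel strings are placeholders, not the exact special tokens of any particular tokenizer.

```python
import random

# Placeholder sentinel strings; real tokenizers define their own special tokens.
FIM_BEGIN = "<|fim_begin|>"
FIM_HOLE = "<|fim_hole|>"
FIM_END = "<|fim_end|>"


def apply_fim(document: str, rng: random.Random, fim_rate: float = 0.1) -> str:
    """With probability fim_rate, rewrite a document into Prefix-Suffix-Middle order.

    Otherwise the document is returned unchanged for ordinary next-token prediction.
    """
    if len(document) < 2 or rng.random() >= fim_rate:
        return document
    # Pick two distinct cut points to split the text into prefix / middle / suffix.
    i, j = sorted(rng.sample(range(len(document) + 1), 2))
    prefix, middle, suffix = document[:i], document[i:j], document[j:]
    # PSM order: the model reads prefix and suffix, then learns to generate the middle.
    return f"{FIM_BEGIN}{prefix}{FIM_HOLE}{suffix}{FIM_END}{middle}"
```

Because the transformed text is still trained with plain next-token prediction, the same model keeps its left-to-right ability on the other ~90% of documents while also learning code infilling.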
