What Is DeepSeek, China’s AI Startup Sending Shockwaves Through Glo…

Author: Rhoda Laboureya… | Date: 25-03-09 03:58 | Views: 41 | Comments: 0

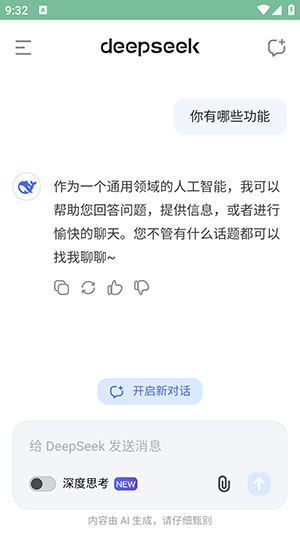

You can also use DeepSeek in English simply by addressing it in that language. After data preparation, you can use the sample shell script to fine-tune deepseek-ai/deepseek-coder-6.7b-instruct. This is a general-use model that excels at reasoning and multi-turn conversations, with an improved focus on longer context lengths. On Monday, Altman acknowledged that DeepSeek-R1 was "impressive" while defending his company’s focus on greater computing power. Two former employees attributed the company’s success to Liang’s focus on more cost-efficient AI architecture. While export controls have been regarded as an important tool for ensuring that leading AI implementations adhere to our laws and value systems, the success of DeepSeek underscores the limits of such measures when competing nations can develop and release state-of-the-art models (somewhat) independently. It achieved a 98% success rate on coding benchmarks and a perfect score on the A-Level Pure Mathematics exam, indicating strong logical processing abilities.
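The data preparation step mentioned above is not spelled out in this post. As a minimal sketch, assuming the fine-tuning script expects one JSON object per line with `instruction` and `output` fields (an assumption to check against the script you actually use), the training file could be produced like this. The helper name `to_jsonl` and the sample records are hypothetical.

```python
import json

def to_jsonl(records):
    """Serialize instruction/output pairs as JSON Lines (one object per line).

    The field names "instruction" and "output" are an assumption about the
    format the fine-tuning script expects, not something stated in this post.
    """
    lines = []
    for rec in records:
        if not rec.get("instruction") or not rec.get("output"):
            raise ValueError("each record needs non-empty 'instruction' and 'output'")
        lines.append(json.dumps(
            {"instruction": rec["instruction"], "output": rec["output"]},
            ensure_ascii=False,
        ))
    return "\n".join(lines) + "\n"

# Hypothetical sample data, for illustration only.
samples = [
    {"instruction": "Write a function that adds two numbers.",
     "output": "def add(a, b):\n    return a + b"},
    {"instruction": "Reverse a string in Python.",
     "output": "def reverse(s):\n    return s[::-1]"},
]

jsonl_text = to_jsonl(samples)
print(jsonl_text)
```

Newlines inside an answer are escaped by `json.dumps`, so each record still occupies exactly one line of the file.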

The DeepSeek LLM 67B Chat model achieved an impressive 73.78% pass rate on the HumanEval coding benchmark, surpassing models of similar size. The LLM was trained on a large dataset of 2 trillion tokens in both English and Chinese, employing architectures such as LLaMA and Grouped-Query Attention. Attracting attention from world-class mathematicians as well as machine learning researchers, the AIMO sets a new benchmark for excellence in the field. Hermes 2 Pro is an upgraded, retrained version of Nous Hermes 2, consisting of an updated and cleaned version of the OpenHermes 2.5 Dataset, as well as a newly introduced Function Calling and JSON Mode dataset developed in-house. Specialized versions: different model sizes are available for various use cases, from the lighter 7B-parameter model to the more powerful 67B model. Highly flexible and scalable: offered in model sizes of 1B, 5.7B, 6.7B and 33B, enabling users to choose the setup most suitable for their requirements. We activate torch.compile for batch sizes 1 to 32, where we observed the most acceleration. We are actively collaborating with the torch.compile and torchao teams to incorporate their latest optimizations into SGLang. Benchmark results show that SGLang v0.3 with MLA optimizations achieves 3x to 7x higher throughput than the baseline system.
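To make the HumanEval figure concrete: a pass rate is simply solved problems divided by total problems. HumanEval contains 164 problems, and 121 solved out of 164 rounds to the 73.78% quoted above. The count of 121 is inferred from the percentage, not reported in this post, and the sketch makes no claim about the sampling settings used.

```python
HUMANEVAL_PROBLEMS = 164  # size of the HumanEval benchmark

def pass_rate(solved: int, total: int) -> float:
    """Return the percentage of problems solved, rounded to two decimals."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= solved <= total:
        raise ValueError("solved must be between 0 and total")
    return round(100.0 * solved / total, 2)

# 121 is an inferred count (assumption), chosen because it reproduces 73.78%.
print(pass_rate(121, HUMANEVAL_PROBLEMS))
```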


Multi-head Latent Attention (MLA) is a new attention variant introduced by the DeepSeek team to improve inference efficiency. The 7B model used Multi-Head Attention, while the 67B model used Grouped-Query Attention. Nous-Hermes-Llama2-13b is a state-of-the-art language model fine-tuned on over 300,000 instructions. This model was fine-tuned by Nous Research, with Teknium and Emozilla leading the fine-tuning process and dataset curation, Redmond AI sponsoring the compute, and several other contributors. For example, the DeepSeek-V3 model was trained using approximately 2,000 Nvidia H800 chips over 55 days, costing around $5.58 million, significantly less than comparable models from other companies. Hermes 3 is a generalist language model with many improvements over Hermes 2, including advanced agentic capabilities, much better roleplaying, reasoning, multi-turn conversation, long-context coherence, and improvements across the board. This is a general-use model that offers advanced natural language understanding and generation capabilities, empowering applications with high-performance text-processing functionality across diverse domains and languages.
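Why the choice of attention variant matters for inference can be seen from the key-value cache. In standard Multi-Head Attention every query head has its own key and value heads. Grouped-Query Attention shares each key/value head across a group of query heads, so the cache shrinks in proportion. The sketch below uses illustrative layer and head counts that are assumptions, not the actual DeepSeek configurations, and it does not model MLA, which compresses the cache differently.

```python
def kv_cache_bytes_per_token(num_layers: int, num_kv_heads: int,
                             head_dim: int, bytes_per_value: int = 2) -> int:
    """Bytes of KV cache stored per generated token.

    The factor 2 accounts for storing both a key and a value vector;
    bytes_per_value=2 corresponds to 16-bit floats.
    """
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value

# Illustrative (hypothetical) configuration: 32 layers, 32 query heads, head_dim 128.
LAYERS, QUERY_HEADS, HEAD_DIM = 32, 32, 128

mha = kv_cache_bytes_per_token(LAYERS, num_kv_heads=QUERY_HEADS, head_dim=HEAD_DIM)
gqa = kv_cache_bytes_per_token(LAYERS, num_kv_heads=8, head_dim=HEAD_DIM)  # 4 query heads per KV head

print(f"MHA: {mha // 1024} KiB per token")
print(f"GQA: {gqa // 1024} KiB per token")
print(f"reduction: {mha // gqa}x")
```

With these numbers the cache drops from 512 KiB to 128 KiB per token, which is why larger models tend to favor grouped or compressed key-value schemes.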

How do you use deepseek-coder-instruct to complete code? The results show that DeepSeek-Coder-Base-33B significantly outperforms existing open-source code LLMs. After instruction tuning, the DeepSeek-Coder-Instruct-33B model outperforms GPT-3.5-turbo on HumanEval and achieves results comparable to GPT-3.5-turbo on MBPP. R1 is notable, however, because o1 had stood alone as the only reasoning model on the market, and the clearest sign that OpenAI was the market leader. And apparently the US stock market is already choosing, by dumping Nvidia stock. But reducing the total volume of chips going into China limits the total number of frontier models that can be trained and how widely they can be deployed, raising the chances that U.S. These are the high-performance computer chips needed for AI. To ensure unbiased and thorough performance assessments, DeepSeek AI designed new problem sets, such as the Hungarian National High-School Exam and Google’s instruction-following evaluation dataset. Surprisingly, our DeepSeek-Coder-Base-7B reaches the performance of CodeLlama-34B. Step 2: Further pre-training using an extended 16K window size on an additional 200B tokens, resulting in foundational models (DeepSeek-Coder-Base). DeepSeek’s language models, designed with architectures akin to LLaMA, underwent rigorous pre-training. DeepSeek Coder comprises a series of code language models, each trained from scratch on 2T tokens, with a composition of 87% code and 13% natural language in both English and Chinese.
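The question of how to use deepseek-coder-instruct goes unanswered in the text, so here is a minimal sketch using the Hugging Face `transformers` library. It assumes `torch` and `transformers` are installed, that the checkpoint ships a chat template, and that enough memory is available to load the weights. The helper names `build_messages` and `complete`, the bfloat16 dtype, and the generation settings are illustrative choices, not the authors' documented method.

```python
MODEL_ID = "deepseek-ai/deepseek-coder-6.7b-instruct"

def build_messages(task: str) -> list:
    """Wrap a coding request in the chat-message structure used by chat templates."""
    return [{"role": "user", "content": task.strip()}]

def complete(task: str, max_new_tokens: int = 256) -> str:
    """Generate a completion for a coding request (downloads the model on first use)."""
    # Imported lazily so the helper above can be used without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype=torch.bfloat16)

    # Render the conversation to text with the model's own chat template (assumed to exist).
    prompt = tokenizer.apply_chat_template(
        build_messages(task), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False).to(model.device)

    # Greedy decoding keeps the output deterministic for a given prompt.
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)

    # Drop the prompt tokens and decode only the newly generated part.
    new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `complete("Write a quicksort function in Python.")` would return the model's answer as a string. Nothing is downloaded until `complete` is called.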

