Are You Able to Spot a DeepSeek AI News Professional?


Posted by Deborah on 25-03-04 01:10


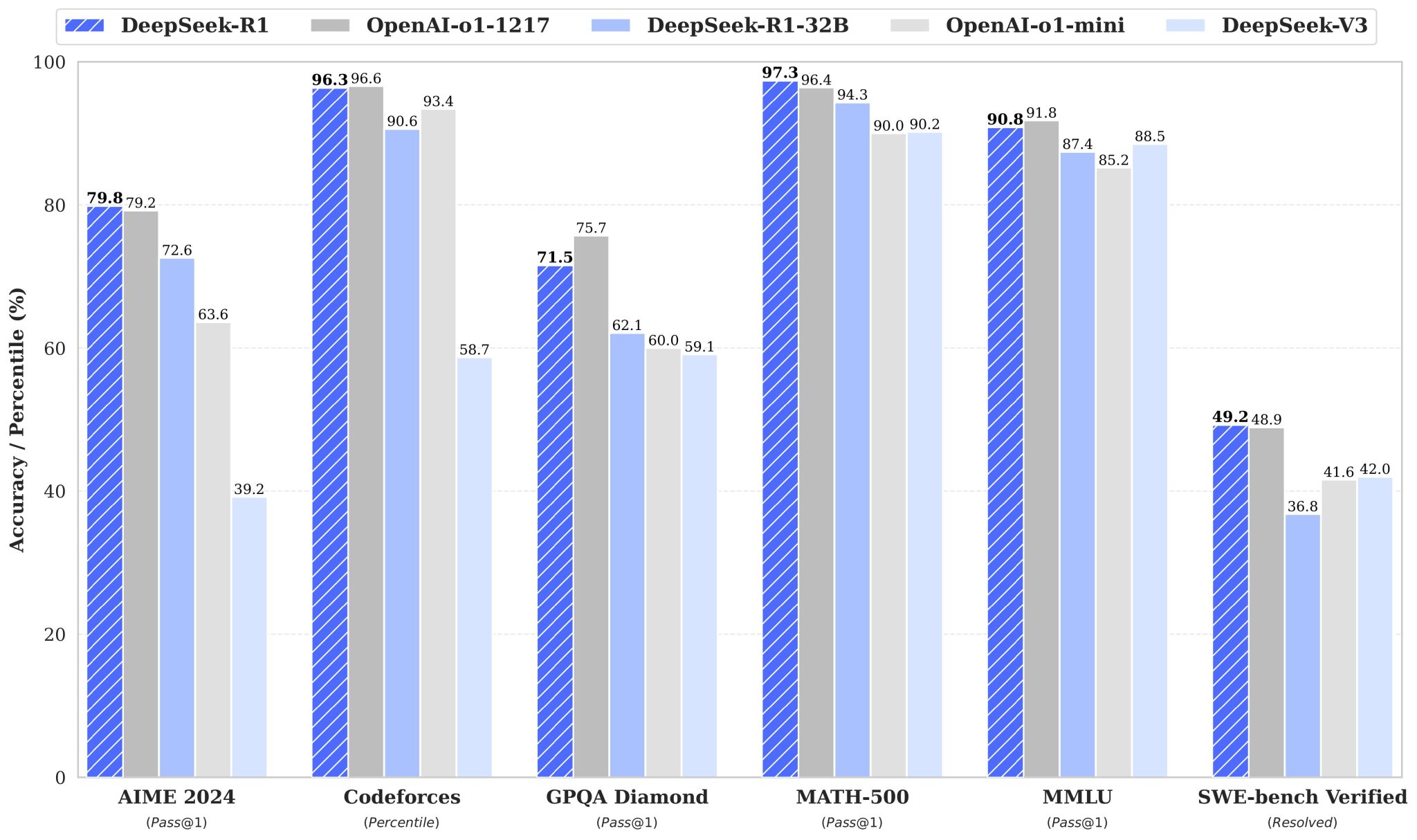

For those of you who don’t know, distillation is the process by which a large, powerful model "teaches" a smaller, less powerful model with synthetic data. Token price refers to what a model charges for the chunks of text (tokens) it processes, usually quoted per million tokens. The company released two variants of its DeepSeek Chat this week: a 7B and a 67B-parameter DeepSeek LLM, trained on a dataset of two trillion tokens in English and Chinese. It’s unambiguously hilarious that it’s a Chinese company doing the work OpenAI was named to do. Liu, of the Chinese Embassy, reiterated China’s stances on Taiwan, Xinjiang and Tibet. China’s DeepSeek released an open-source model that works on par with OpenAI’s latest models but costs a tiny fraction to operate. Moreover, you can even download it and run it for free (or for the cost of your electricity) yourself. The model, which preceded R1, had outscored GPT-4o, Llama 3.3-70B and Alibaba’s Qwen2.5-72B, China’s previous leading AI model. India will develop its own large language model powered by artificial intelligence (AI) to compete with DeepSeek and ChatGPT, Minister of Electronics and IT Ashwini Vaishnaw told media on Thursday. This parameter increase allows the model to learn more complex patterns and nuances, enhancing its language understanding and generation capabilities.
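To make the distillation idea concrete, here is a toy sketch of the data flow only: a "teacher" labels synthetic inputs and a "student" is trained on those labels. Everything in it is an assumption for illustration (the `teacher` function, the linear student, the learning rate); it is not how DeepSeek or anyone else actually distills a language model.

```python
import random

def teacher(x: float) -> float:
    """Hypothetical stand-in for a large model: a fixed function we treat as the 'expert'."""
    return 3.0 * x + 1.0

def make_synthetic_data(n: int, seed: int = 0) -> list[tuple[float, float]]:
    """Sample random inputs and let the teacher label them (the synthetic dataset)."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, teacher(x)) for x in xs]

def train_student(data, epochs: int = 500, lr: float = 0.1) -> tuple[float, float]:
    """Fit a tiny linear student (w, b) to the teacher's labels with gradient descent."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y      # student prediction minus teacher label
            grad_w += 2.0 * err * x
            grad_b += 2.0 * err
        w -= lr * grad_w / n           # step along the mean-squared-error gradient
        b -= lr * grad_b / n
    return w, b

# The student never sees "real" data, only what the teacher produced.
student_w, student_b = train_student(make_synthetic_data(200))
```

In a real setting the teacher's outputs would be generated text (for example, reasoning traces) and the student would be a smaller language model fine-tuned on them, but the shape of the loop is the same.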

When an AI company releases multiple models, the most powerful one usually steals the spotlight, so let me tell you what this means: an R1-distilled Qwen-14B, which is a 14 billion parameter model, 12x smaller than GPT-3 from 2020, is as good as OpenAI o1-mini and much better than GPT-4o or Claude Sonnet 3.5, the best non-reasoning models. In other words, DeepSeek let it figure out by itself how to do reasoning. Let me get a bit technical here (not much) to explain the difference between R1 and R1-Zero. We believe this warrants further exploration and therefore present only the results of the simple SFT-distilled models here. Reward engineering. Researchers developed a rule-based reward system for the model that outperforms the neural reward models that are more commonly used. Fortunately, the top model developers (including OpenAI and Google) are already involved in cybersecurity initiatives where non-guard-railed instances of their cutting-edge models are being used to push the frontier of offensive & predictive security. Did they find a way to make these models incredibly cheap that OpenAI and Google ignore? Are they copying Meta’s approach to make the models a commodity? Then there are six other models created by training weaker base models (Qwen and Llama) on R1-distilled data.
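For a feel of what "rule-based" means here, the sketch below scores a model output with plain rules instead of a learned (neural) reward model. The specific rules are my own assumptions for illustration: a `<think>...</think>` block followed by `Answer: ...`, a 0.5 format bonus and a 1.0 accuracy bonus. The post does not spell out the actual rules or weights.

```python
import re

# Hypothetical output format: reasoning inside <think> tags, then "Answer: <final answer>".
OUTPUT_PATTERN = re.compile(r"^<think>.*?</think>\s*Answer:\s*(.+)$", re.DOTALL)

def rule_based_reward(output: str, expected: str) -> float:
    """Score an output with fixed rules: one for format, one for correctness."""
    match = OUTPUT_PATTERN.match(output.strip())
    if match is None:
        return 0.0                      # wrong format: no reward at all
    reward = 0.5                        # format rule satisfied
    if match.group(1).strip() == expected.strip():
        reward += 1.0                   # accuracy rule: final answer matches exactly
    return reward

good = rule_based_reward("<think>2 + 2 is 4</think> Answer: 4", "4")
wrong = rule_based_reward("<think>maybe 5?</think> Answer: 5", "4")
unformatted = rule_based_reward("4", "4")
```

The appeal of rules like these is that they are cheap to evaluate and leave the model little room to game a learned scorer, which fits the claim above that they can beat neural reward models.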

That’s what you usually do to get a chat model (ChatGPT) from a base model (out-of-the-box GPT-4), but in a much larger amount. It is a resource-efficient model that rivals closed-source systems like GPT-4 and Claude-3.5-Sonnet. If someone asks for "a pop star drinking" and the output looks like Taylor Swift, who’s responsible? For ordinary people like you and me who are simply trying to verify whether a post on social media is true, will we be able to independently vet multiple independent sources online, or will we only get the information that the LLM provider wants to show us in its own platform’s response? Neither OpenAI, Google, nor Anthropic has given us something like this. Owing to its optimal use of scarce resources, DeepSeek has been pitted against US AI powerhouse OpenAI, which is widely known for building large language models. The "GPT" in "ChatGPT" stands for "Generative Pre-trained Transformer," which reflects the underlying technology that allows it to understand and produce natural language.

AI evolution will likely produce models such as DeepSeek, which improves technical workflows, and ChatGPT, which enhances business communication and creativity across multiple sectors. DeepSeek wanted to keep SFT at a minimum. After pre-training, R1 was given a small quantity of high-quality human examples (supervised fine-tuning, SFT). Scale CEO Alexandr Wang says the Scaling phase of AI has ended: AI has "genuinely hit a wall" in terms of pre-training, but there is still progress, with evals climbing and models getting smarter thanks to post-training and test-time compute, and we have entered the Innovating phase, where reasoning and other breakthroughs will lead to superintelligence in 6 years or less. As DeepSeek shows, considerable AI progress can be made at lower cost, and the competition in AI may change significantly. Talking about costs, somehow DeepSeek has managed to build R1 at 5-10% of the cost of o1 (and that’s being charitable with OpenAI’s input-output pricing).
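Since the comparison rests on per-token pricing, here is a small sketch of how a per-request cost falls out of per-million-token prices. The prices and token counts are made-up placeholders, not the real rates of o1, R1 or any other model; plug in current published prices to get a real comparison.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost in dollars of one request, given prices quoted per million tokens."""
    total = input_tokens * input_price_per_m + output_tokens * output_price_per_m
    return total / 1_000_000

# Hypothetical prices in dollars per million tokens (placeholders, NOT real price lists).
expensive = request_cost(2_000, 500, input_price_per_m=10.0, output_price_per_m=40.0)
cheap = request_cost(2_000, 500, input_price_per_m=0.5, output_price_per_m=2.0)

# Fraction of the expensive model's cost that the cheap model charges for the same request.
cost_ratio = cheap / expensive
```

Note that input and output tokens are priced separately, so the ratio you get depends on how long your prompts and answers are.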
