DeepSeek-R1 Models Now Available on AWS

Author: Mckenzie | Posted: 25-03-03 16:47

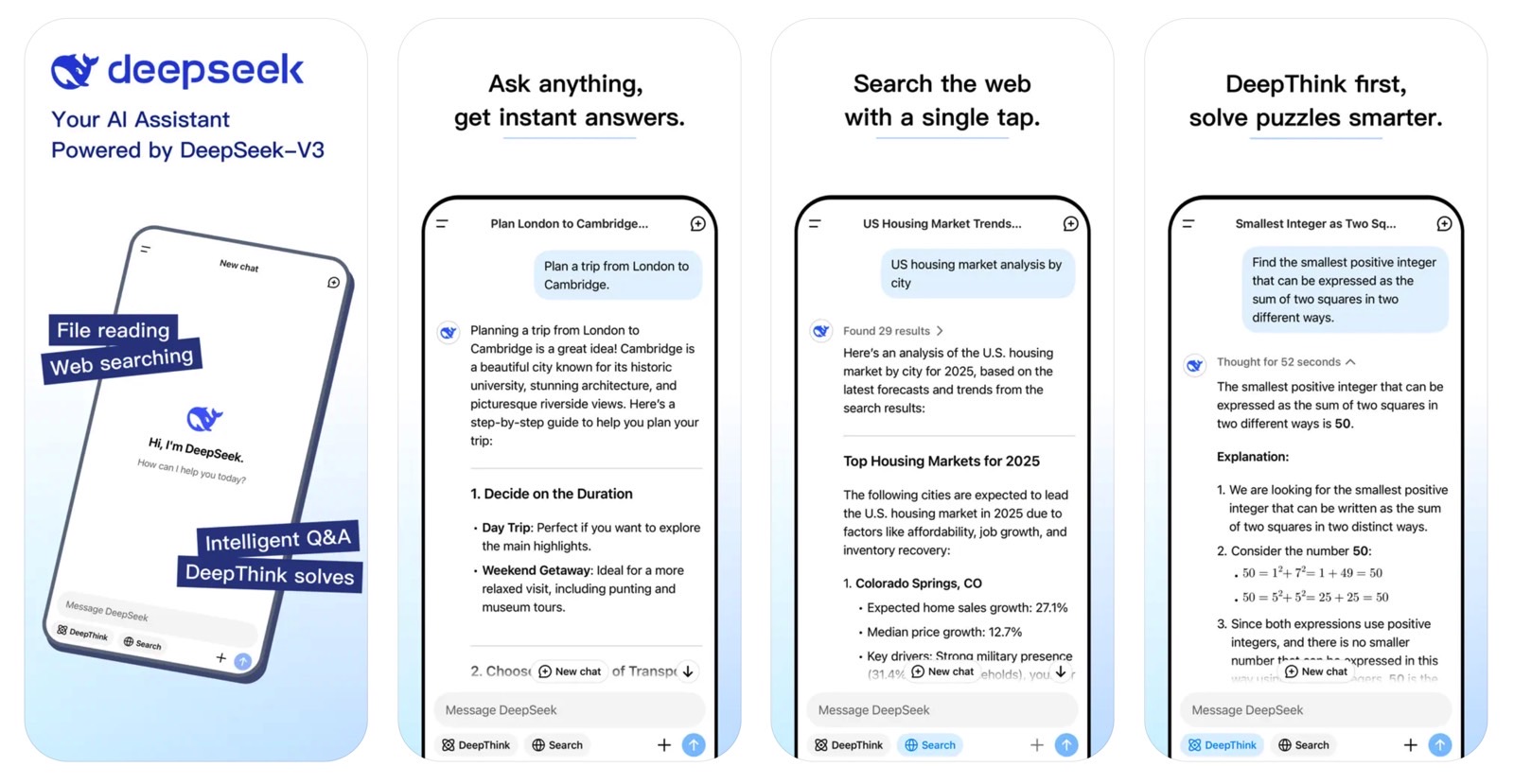

The new DeepSeek programme was released to the public on January 20. By January 27, DeepSeek's app had already reached the top of Apple's App Store chart. DeepSeek launched DeepSeek-V3 in December 2024 and subsequently released DeepSeek-R1 and DeepSeek-R1-Zero, each with 671 billion parameters, and the DeepSeek-R1-Distill models, ranging from 1.5 to 70 billion parameters, on January 20, 2025. They added their vision-based Janus-Pro-7B model on January 27, 2025. The models are publicly available and are reportedly 90-95% more affordable and cost-efficient than comparable models. After storing these publicly available models in an Amazon Simple Storage Service (Amazon S3) bucket or an Amazon SageMaker Model Registry, go to Imported models under Foundation models in the Amazon Bedrock console to import and deploy them in a fully managed, serverless environment through Amazon Bedrock. With Amazon Bedrock Guardrails, you can independently evaluate user inputs and model outputs. For more details on the model architecture, please refer to the DeepSeek-V3 repository. Limit sharing of private data: to reduce privacy risks, refrain from disclosing sensitive information such as your name, address, or confidential details. Governments that have restricted the app all cite "security concerns" about the Chinese technology and a lack of clarity about how users' personal data is handled by the operator.
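As a minimal sketch of evaluating user inputs and model outputs independently, the helper below calls the Bedrock Runtime `ApplyGuardrail` operation through a boto3 client (assumed to be created with `boto3.client("bedrock-runtime")`). The guardrail ID and version are placeholders you would take from your own guardrail, and the function name `check_with_guardrail` is hypothetical.

```python
from typing import Any, Dict


def check_with_guardrail(client: Any, guardrail_id: str, guardrail_version: str,
                         text: str, source: str) -> bool:
    """Return True if the guardrail intervened on `text`.

    `source` is "INPUT" for a user prompt and "OUTPUT" for a model response,
    so the same guardrail can be applied to each side separately.
    """
    if source not in ("INPUT", "OUTPUT"):
        raise ValueError("source must be 'INPUT' or 'OUTPUT'")
    # `client` is assumed to be a boto3 "bedrock-runtime" client (or a stub).
    response: Dict[str, Any] = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,   # placeholder guardrail ID
        guardrailVersion=guardrail_version,  # placeholder version, e.g. "1"
        source=source,
        content=[{"text": {"text": text}}],
    )
    # "GUARDRAIL_INTERVENED" means a policy blocked or masked the content.
    return response.get("action") == "GUARDRAIL_INTERVENED"
```

In use, the prompt would be checked with `source="INPUT"` before it reaches the imported model, and the reply with `source="OUTPUT"` before it reaches the user, so either side can be blocked on its own. Passing the client in as a parameter also makes the helper easy to test with a stub.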

This came after Seoul's information privacy watchdog, the Personal Information Protection Commission, announced on January 31 that it would send a written request to DeepSeek for details about how users' personal information is managed. More evaluation details can be found in the Detailed Evaluation. Instead, DeepSeek has found a way to reduce the KV cache size without compromising quality, at least in their internal experiments. Each model is pre-trained on a project-level code corpus using a window size of 16K and an additional fill-in-the-blank task, to support project-level code completion and infilling. OpenRouter routes requests to the best providers that are able to handle your prompt size and parameters, with fallbacks to maximize uptime. Prompt AI raised $6 million for its home AI assistant. Let's see how to create a prompt to request this from DeepSeek. The goal is to see whether the model can solve the programming task without being explicitly shown the documentation for the API update. Once it reaches the target nodes, we will endeavor to ensure that it is instantaneously forwarded via NVLink to specific GPUs that host their target experts, without being blocked by subsequently arriving tokens.
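The KV cache sentence does not name the technique; assuming it refers to DeepSeek's Multi-head Latent Attention (MLA), which caches one compressed latent vector per token instead of full per-head keys and values, the arithmetic below compares per-token, per-layer cache sizes. The dimensions used (128 heads of size 128, a 512-dimensional latent and a 64-dimensional decoupled RoPE key) are illustrative assumptions, and the function names are hypothetical.

```python
def mha_kv_elements_per_token(n_heads: int, d_head: int) -> int:
    # Standard multi-head attention caches one key and one value vector per head.
    return 2 * n_heads * d_head


def latent_kv_elements_per_token(d_latent: int, d_rope: int) -> int:
    # Latent scheme (assumed MLA-style): one compressed KV latent shared by all
    # heads, plus one small decoupled RoPE key, also shared across heads.
    return d_latent + d_rope


def kv_cache_bytes(elements_per_token: int, n_layers: int, seq_len: int,
                   bytes_per_element: int = 2) -> int:
    # Total cache for one sequence; 2 bytes per element assumes BF16/FP16 storage.
    return elements_per_token * n_layers * seq_len * bytes_per_element


if __name__ == "__main__":
    mha = mha_kv_elements_per_token(n_heads=128, d_head=128)    # 32768
    mla = latent_kv_elements_per_token(d_latent=512, d_rope=64)  # 576
    print(f"MHA: {mha}, latent: {mla}, reduction: {mha / mla:.1f}x")
```

Under these assumed sizes the script prints a reduction of about 56.9x per token and layer. This only counts stored elements; it says nothing about the quality side of the trade-off.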

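A fill-in-the-blank (fill-in-the-middle) objective is commonly built by cutting a span out of a source file and moving it to the end, so the model learns to generate the missing middle from the surrounding prefix and suffix. The sketch below shows that transformation in prefix-suffix-middle (PSM) order. The sentinel strings and function names are hypothetical placeholders, not the special tokens of any particular tokenizer, and the 16K-token window is not modeled.

```python
import random

# Hypothetical sentinel strings; real tokenizers define their own special tokens.
FIM_PREFIX = "<FIM_PREFIX>"
FIM_SUFFIX = "<FIM_SUFFIX>"
FIM_MIDDLE = "<FIM_MIDDLE>"


def make_fim_example(document: str, hole_start: int, hole_end: int) -> str:
    """Turn a document into a PSM training string with document[hole_start:hole_end] as the hole."""
    if not 0 <= hole_start <= hole_end <= len(document):
        raise ValueError("invalid hole span")
    prefix = document[:hole_start]
    middle = document[hole_start:hole_end]
    suffix = document[hole_end:]
    # The model is conditioned on prefix and suffix, then trained to emit the middle.
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{middle}"


def random_fim_example(document: str, rng: random.Random) -> str:
    # Pick two cut points uniformly at random; the span between them becomes the hole.
    start, end = sorted(rng.randint(0, len(document)) for _ in range(2))
    return make_fim_example(document, start, end)
```

Reassembling prefix + middle + suffix always gives back the original file; only the order changes, which turns infilling into an ordinary left-to-right prediction problem.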

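The NVLink sentence describes a two-stage dispatch for mixture-of-experts (MoE) routing: a token first crosses to each node that hosts one of its selected experts, then is forwarded over NVLink to the specific GPUs inside that node. The sketch below only does the bookkeeping for such a plan, under the hypothetical assumption that experts are laid out contiguously (`experts_per_gpu` experts per GPU, `gpus_per_node` GPUs per node). It does not model the actual transfers or their overlap with computation, and all names are illustrative.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def expert_location(expert_id: int, experts_per_gpu: int,
                    gpus_per_node: int) -> Tuple[int, int]:
    """Map an expert to (node, local GPU index), assuming a contiguous layout."""
    gpu = expert_id // experts_per_gpu
    return gpu // gpus_per_node, gpu % gpus_per_node


def plan_dispatch(token_experts: Dict[int, List[int]], experts_per_gpu: int,
                  gpus_per_node: int) -> Dict[int, Dict[int, List[int]]]:
    """Group tokens by destination node (inter-node hop), then by GPU (NVLink hop)."""
    plan: Dict[int, Dict[int, List[int]]] = defaultdict(lambda: defaultdict(list))
    for token, experts in token_experts.items():
        for expert in experts:
            node, gpu = expert_location(expert, experts_per_gpu, gpus_per_node)
            # A GPU hosting several of this token's experts needs the token only once.
            if token not in plan[node][gpu]:
                plan[node][gpu].append(token)
    return {node: dict(gpus) for node, gpus in plan.items()}


def nodes_touched(token_experts: Dict[int, List[int]], experts_per_gpu: int,
                  gpus_per_node: int) -> Dict[int, List[int]]:
    """Distinct destination nodes per token (what the inter-node hop must cover)."""
    return {
        token: sorted({expert_location(e, experts_per_gpu, gpus_per_node)[0]
                       for e in experts})
        for token, experts in token_experts.items()
    }
```

For example, with two experts per GPU and two GPUs per node, a token routed to experts 0, 3 and 5 lands on GPUs 0 and 1 of node 0 and on GPU 0 of node 1. The per-node grouping makes it easy to see how many nodes each token touches, which is what the inter-node traffic scales with in this layout.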
Efficient parallelism: model parallelism (splitting large models across GPUs). This paper presents a new benchmark called CodeUpdateArena to evaluate how well large language models (LLMs) can update their knowledge about evolving code APIs, a critical limitation of current approaches. Nvidia has released Nemotron-4 340B, a family of models designed to generate synthetic data for training large language models (LLMs). OpenSourceWeek: DeepGEMM. Introducing DeepGEMM, an FP8 GEMM library that supports both dense and MoE GEMMs, powering V3/R1 training and inference. By leveraging a vast amount of math-related web data and introducing a novel optimization technique called Group Relative Policy Optimization (GRPO), the researchers have achieved impressive results on the challenging MATH benchmark. Second, the researchers introduced a new optimization technique called Group Relative Policy Optimization (GRPO), which is a variant of the well-known Proximal Policy Optimization (PPO) algorithm. With those basic ideas covered, let's dive into GRPO. Now that we've calculated the advantage for all of our outputs, we can use it to calculate the bulk of the GRPO objective. Korea Hydro & Nuclear Power, which is run by the South Korean government, said it blocked the use of AI services, including DeepSeek, on its employees' devices last month.
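To make the advantage step concrete: in GRPO, several outputs are sampled for the same prompt, and each output's reward is normalized against the group's mean and standard deviation, which takes the place of PPO's learned value baseline. The sketch below computes those group-relative advantages and a PPO-style clipped surrogate. It is a simplified, sequence-level version under stated assumptions: one log-probability per whole output, population standard deviation, and no KL penalty. The function names are hypothetical.

```python
import math
from statistics import mean, pstdev
from typing import List


def group_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Group-relative advantage: (r_i - mean(r)) / std(r) within one prompt's group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; some implementations use sample std
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_surrogate(logp_new: float, logp_old: float, advantage: float,
                      clip_eps: float = 0.2) -> float:
    """PPO-style clipped term for one output (sequence-level simplification)."""
    ratio = math.exp(logp_new - logp_old)  # pi_theta / pi_theta_old
    clipped = min(max(ratio, 1 - clip_eps), 1 + clip_eps)
    return min(ratio * advantage, clipped * advantage)


def grpo_surrogate(logps_new: List[float], logps_old: List[float],
                   rewards: List[float], clip_eps: float = 0.2) -> float:
    """Average clipped term over the group (to be maximized); KL penalty omitted."""
    advantages = group_advantages(rewards)
    terms = [clipped_surrogate(n, o, a, clip_eps)
             for n, o, a in zip(logps_new, logps_old, advantages)]
    return sum(terms) / len(terms)
```

With rewards `[1, 0, 1, 0]`, the advantages come out to approximately `[1, -1, 1, -1]`: correct answers are pushed up and incorrect ones down relative to their own group, without training a separate value model.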

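For reference, the full objective, reconstructed from the DeepSeekMath paper that introduced GRPO, averages the clipped term over the tokens of each output and subtracts a KL penalty toward a reference policy. With outcome rewards, every token of output $o_i$ shares the same normalized advantage. The shorthand $\rho_{i,t}$ for the probability ratio is introduced here for readability; $G$ is the group size, and $\varepsilon$ and $\beta$ are hyperparameters.

```latex
\mathcal{J}_{\text{GRPO}}(\theta) =
\mathbb{E}_{q \sim P(Q),\ \{o_i\}_{i=1}^{G} \sim \pi_{\theta_{\text{old}}}(O \mid q)}
\left[ \frac{1}{G} \sum_{i=1}^{G} \frac{1}{|o_i|} \sum_{t=1}^{|o_i|}
\left( \min\!\left( \rho_{i,t} \hat{A}_{i,t},\
\operatorname{clip}\!\left(\rho_{i,t}, 1-\varepsilon, 1+\varepsilon\right) \hat{A}_{i,t} \right)
- \beta\, \mathbb{D}_{\text{KL}}\!\left[\pi_\theta \,\|\, \pi_{\text{ref}}\right] \right) \right]

\rho_{i,t} = \frac{\pi_\theta(o_{i,t} \mid q, o_{i,<t})}{\pi_{\theta_{\text{old}}}(o_{i,t} \mid q, o_{i,<t})},
\qquad
\hat{A}_{i,t} = \frac{r_i - \operatorname{mean}(\{r_1, \dots, r_G\})}{\operatorname{std}(\{r_1, \dots, r_G\})}

\mathbb{D}_{\text{KL}}\!\left[\pi_\theta \,\|\, \pi_{\text{ref}}\right] =
\frac{\pi_{\text{ref}}(o_{i,t} \mid q, o_{i,<t})}{\pi_\theta(o_{i,t} \mid q, o_{i,<t})}
- \log \frac{\pi_{\text{ref}}(o_{i,t} \mid q, o_{i,<t})}{\pi_\theta(o_{i,t} \mid q, o_{i,<t})} - 1
```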
This week, government agencies in countries including South Korea and Australia have blocked access to Chinese artificial intelligence (AI) startup DeepSeek's new AI chatbot programme, largely for government employees. Here's what we know about DeepSeek and why countries are banning it. Which countries are banning DeepSeek's AI programme? Some government agencies in several countries are seeking or enacting bans on the AI software for their staff. Officials said that the government had urged ministries and agencies on Tuesday to be careful about using AI programmes in general, including ChatGPT and DeepSeek. Last month, DeepSeek made headlines after it caused share prices of US tech firms to plummet, having claimed that its model cost only a fraction of what its competitors had spent to build their own AI programmes. Over the course of less than 10 hours of trading, news that China had created a better AI mousetrap (one that took less time and cost less money to build and operate) subtracted $600 billion from the market capitalization of Nvidia (NASDAQ: NVDA). On one hand, Constellation Energy stock, at its trailing price-to-earnings ratio of 20.7, does not seem especially expensive.