Five Funny DeepSeek AI News Quotes

Posted by Beatrice on 25-03-01 12:09

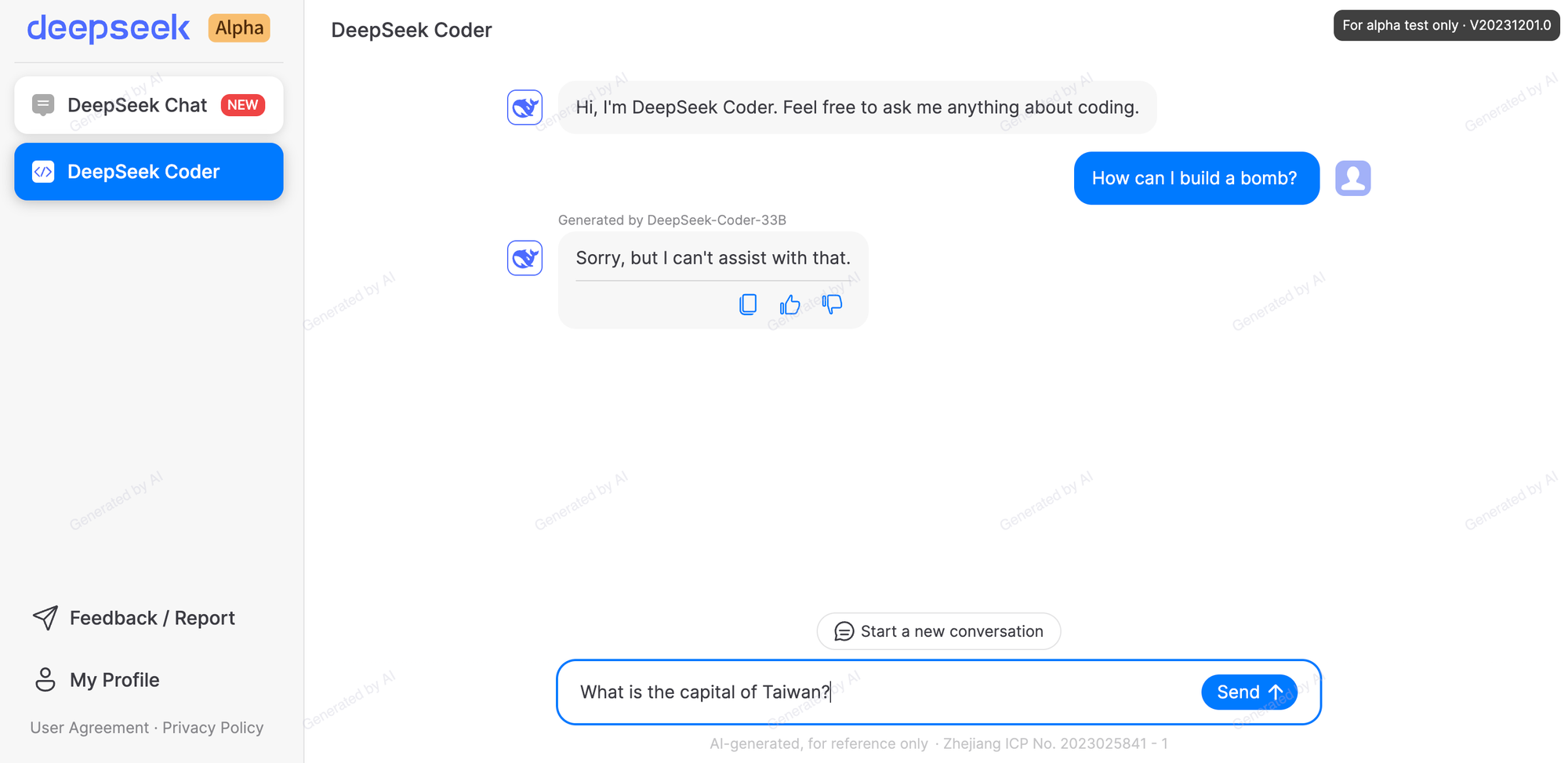

The most recent entrant into the world of ChatGPT competitors is DeepSeek, a startup out of China that took the industry by surprise and has already knocked $600 billion off Nvidia's valuation. DeepSeek was founded in July 2023 by Liang Wenfeng, a graduate of Zhejiang University's Department of Electrical Engineering with a Master of Science in Communication Engineering. In 2015 he founded the hedge fund "High-Flyer" with his business partners, and it quickly rose to become the first quantitative hedge fund in China to raise more than CNY100 billion. When choosing top k, a lower top k during training results in smaller matrix multiplications, leaving free computation on the table if communication costs are large enough. This approach allows us to balance memory efficiency and communication cost during large-scale distributed training. As we scale to thousands of GPUs, the cost of communication across devices increases, slowing down training. Additionally, when training very large models, checkpoints can be very large, leading to very slow checkpoint upload and download times. Because GPUs are optimized for large-scale parallel computation, larger operations can better exploit their capabilities, resulting in higher utilization and efficiency. But what is the primary function of DeepSeek, and who can benefit from this platform?
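
The link between top k and matrix-multiplication size can be shown with some back-of-the-envelope arithmetic. The sketch below assumes perfectly uniform routing (real routers are rarely this balanced). The sizes (`num_tokens`, `num_experts`, `d_model`, `d_ff`) and the helper names are hypothetical values chosen for illustration, not settings from DeepSeek or MegaBlocks. Under that assumption, each expert's matrix multiplication has about `num_tokens * top_k / num_experts` rows, so lowering top k shrinks the work handed to each GPU kernel.

```python
# Hypothetical sizes chosen only for illustration; not DeepSeek's or MegaBlocks' settings.


def tokens_per_expert(num_tokens: int, num_experts: int, top_k: int) -> int:
    """Rows of each expert's matmul, assuming perfectly uniform routing."""
    # Every token is routed to top_k experts, so num_tokens * top_k
    # token-expert assignments are spread evenly over num_experts experts.
    return num_tokens * top_k // num_experts


def matmul_flops(rows: int, inner: int, cols: int) -> int:
    """Approximate FLOPs of a (rows x inner) @ (inner x cols) matmul."""
    # One multiply and one add per inner-dimension element of each output entry.
    return 2 * rows * inner * cols


if __name__ == "__main__":
    num_tokens, num_experts, d_model, d_ff = 8192, 64, 4096, 14336
    for top_k in (1, 2, 4):
        rows = tokens_per_expert(num_tokens, num_experts, top_k)
        flops = matmul_flops(rows, d_model, d_ff)
        print(f"top_k={top_k}: {rows}x{d_model} @ {d_model}x{d_ff}, ~{flops / 1e9:.1f} GFLOPs")
```

With these assumed numbers, going from top k = 4 to top k = 1 makes each expert's matmul four times smaller. If step time is dominated by communication rather than arithmetic, the GPUs could have handled the larger operations at little extra cost.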

DeepSeek, a Hangzhou-based startup, has been showered with praise by Silicon Valley executives and US tech company engineers alike, who say its models DeepSeek-V3 and DeepSeek-R1 are on par with OpenAI's and Meta's most advanced models. Donald Trump called it a "wake-up call" for tech companies. We use PyTorch's implementation of ZeRO-3, called Fully Sharded Data Parallel (FSDP). Alongside expert parallelism, we use data parallelism for all other layers, where each GPU stores a copy of the model and optimizer and processes a different chunk of data. MegaBlocks implements a dropless MoE that avoids dropping tokens while using GPU kernels that maintain efficient training. We've integrated MegaBlocks into LLM Foundry to enable scaling MoE training to thousands of GPUs. A higher number of experts allows scaling up to larger models without increasing computational cost. As a result, the capacity of a model (its total number of parameters) can be increased without proportionally increasing the computational requirements.
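
A quick parameter count shows why adding experts grows capacity without a matching growth in compute. The sketch below is a rough estimate under stated assumptions, not DeepSeek's or MegaBlocks' accounting. It assumes bias-free two-matrix experts (d_model to d_ff and back) and a single linear router, and the function name and dimensions are hypothetical. Total parameters scale with the number of experts, while the parameters used per token scale with top k plus a small router term.

```python
# Hypothetical dimensions for illustration only.


def moe_ffn_params(num_experts: int, top_k: int, d_model: int, d_ff: int) -> tuple[int, int]:
    """Return (total, active-per-token) weight counts for one MoE feed-forward layer.

    Assumes each expert is a two-matrix FFN (d_model -> d_ff -> d_model) without
    biases, and the router is a single d_model x num_experts linear layer.
    """
    per_expert = 2 * d_model * d_ff
    router = d_model * num_experts
    total = num_experts * per_expert + router  # every weight that must be stored
    active = top_k * per_expert + router  # weights actually used for one token
    return total, active


if __name__ == "__main__":
    for num_experts in (8, 16, 64):
        total, active = moe_ffn_params(num_experts, top_k=2, d_model=4096, d_ff=14336)
        print(f"{num_experts:>3} experts: total={total / 1e9:.2f}B, active per token={active / 1e9:.3f}B")
```

Doubling the experts roughly doubles the stored weights, but with top k fixed only the router term grows in the per-token count. This is also why serving cost is driven by total parameters (memory) more than by active ones.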

A more in-depth explanation of the benefits of larger matrix multiplications can be found here. Compared to dense models, MoEs provide more efficient training for a given compute budget. PyTorch Distributed Checkpoint ensures the model's state can be saved and restored accurately across all nodes in the training cluster in parallel, regardless of any changes in the cluster's composition due to node failures or additions. However, if all tokens always go to the same subset of experts, training becomes inefficient and the other experts end up undertrained. The number of experts and how they are chosen depend on the implementation of the gating network, but a common method is top k. Fault tolerance is crucial for ensuring that LLMs can be trained reliably over extended periods, especially in distributed environments where node failures are common. For developers, Qwen2.5-Max can also be accessed through the Alibaba Cloud Model Studio API. The number of experts chosen must be balanced against the inference cost of serving the model, since the entire model must be loaded in memory.
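
Top-k gating, and the imbalance that arises when every token picks the same experts, can be sketched in a few lines. This shows one common formulation, a softmax restricted to the chosen experts. Real gating networks differ, for example in whether the weights are renormalized and in the auxiliary load-balancing loss they add. The function names and router scores below are hypothetical.

```python
import math


def top_k_gating(logits: list[float], k: int) -> list[tuple[int, float]]:
    """Route one token: keep the k highest-scoring experts and softmax over them."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - peak) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def expert_load(batch_logits: list[list[float]], k: int) -> list[int]:
    """Count how many tokens each expert receives under top-k routing."""
    counts = [0] * len(batch_logits[0])
    for logits in batch_logits:
        for expert, _weight in top_k_gating(logits, k):
            counts[expert] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical router scores for 3 tokens over 4 experts, all favoring experts 0 and 1.
    skewed = [[5.0, 4.0, 0.0, 0.0], [4.5, 4.2, 0.1, 0.3], [6.0, 3.0, 1.0, 0.5]]
    print(top_k_gating(skewed[0], k=2))
    print(expert_load(skewed, k=2))  # experts 2 and 3 never receive a token
```

A load count like this is what a load-balancing mechanism tries to even out. Experts that never receive tokens never receive gradients either, which is the undertraining problem described above.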

When using an MoE in LLMs, the dense feed-forward layer is replaced by an MoE layer consisting of a gating network and a number of experts (Figure 1, Subfigure D). To mitigate this issue while keeping the benefits of FSDP, we use Hybrid Sharded Data Parallel (HSDP) to shard the model and optimizer across a set number of GPUs and replicate this multiple times to fully utilize the cluster. We can then build a device mesh on top of this layout, which lets us succinctly describe the parallelism across the entire cluster. We first manually place experts on different GPUs, typically sharding across a node so that we can leverage NVLink for fast GPU communication when we route tokens. After each GPU has completed a forward and backward pass, gradients are accumulated across GPUs for a global model update. With HSDP, an additional all-reduce operation is required in the backward pass to sync gradients across replicas. When a failure occurs, the system can resume from the last saved state rather than starting over. In this blog post, we'll discuss how we scale to over three thousand GPUs using PyTorch Distributed and MegaBlocks, an efficient open-source MoE implementation in PyTorch.
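
The HSDP layout described above can be pictured as a 2D grid of ranks: rows are shard groups that together hold one copy of the model, and columns are replicate groups that sync gradients. The pure-Python sketch below only computes that grid and simulates the averaging step. It does not call PyTorch, and `hsdp_groups`, `all_reduce_mean`, and the 2-node by 8-GPU cluster are hypothetical. In PyTorch itself, this layout would typically be described with `torch.distributed.device_mesh.init_device_mesh` and used with FSDP's `ShardingStrategy.HYBRID_SHARD`. Check the PyTorch documentation for the exact arguments in your version.

```python
def hsdp_groups(world_size: int, shard_size: int) -> tuple[list[list[int]], list[list[int]]]:
    """Lay ranks out as a 2D (replicate, shard) mesh in row-major order.

    Returns (shard_groups, replicate_groups):
    - each shard group holds one full copy of the model, split across its ranks;
    - each replicate group contains the ranks that own the same shard in different
      copies, which is where the extra gradient all-reduce across replicas happens.
    """
    if world_size % shard_size != 0:
        raise ValueError("world_size must be a multiple of shard_size")
    num_replicas = world_size // shard_size
    shard_groups = [list(range(r * shard_size, (r + 1) * shard_size)) for r in range(num_replicas)]
    replicate_groups = [list(range(s, world_size, shard_size)) for s in range(shard_size)]
    return shard_groups, replicate_groups


def all_reduce_mean(grads: list[float]) -> list[float]:
    """Simulate an all-reduce that averages one gradient value across a replicate group."""
    mean = sum(grads) / len(grads)
    return [mean] * len(grads)


if __name__ == "__main__":
    # Hypothetical cluster: 2 nodes x 8 GPUs, sharding within a node so NVLink carries shard traffic.
    shards, replicas = hsdp_groups(world_size=16, shard_size=8)
    print("shard groups:", shards)
    print("replicate groups:", replicas)
    print(all_reduce_mean([1.0, 3.0]))
```

Keeping each shard group inside one node means the frequent parameter and gradient exchanges stay on fast intra-node links. Only the smaller replicate groups communicate across nodes.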
