Take 10 Minutes to Get Started With DeepSeek

Author: Dario | Date: 25-02-27 06:21 | Views: 8 | Comments: 0

DeepSeek is an open-source large language model (LLM) project that emphasizes resource-efficient AI development while maintaining cutting-edge performance. Although the DeepSeek R1 model was released recently, some trusted LLM hosting platforms already support it. You can also pull and run the distilled Qwen and Llama versions of the DeepSeek R1 model. Compressor summary: This paper introduces Bode, a fine-tuned LLaMA 2-based model for Portuguese NLP tasks, which performs better than existing LLMs and is freely available. Whether you’re a researcher, developer, or AI enthusiast, understanding DeepSeek is essential as it opens up new possibilities in natural language processing (NLP), search capabilities, and AI-driven applications. Integration into existing applications via API. Easy Integration: Simple API integration and comprehensive documentation. We provide comprehensive documentation and examples to help you get started. Visit the Azure AI Foundry website to get started. As the preview above shows, you can access distilled versions of DeepSeek R1 on Microsoft’s Azure AI Foundry. The preview below demonstrates how to run DeepSeek-R1-Distill-Llama-8B with Ollama. Watch Ollama Local LLM Tool on YouTube for a quick walkthrough. We will update the article occasionally as the number of local LLM tools that support R1 increases. At the time of writing this article, the DeepSeek R1 model is available on trusted LLM hosting platforms like Azure AI Foundry and Groq.
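As a rough illustration of pulling and running the distilled variants with Ollama, the sketch below maps each distilled checkpoint to the tag it is assumed to carry in the Ollama model library and builds the matching command line. The tag names (`deepseek-r1:8b` and so on) and the helper `ollama_command` are assumptions that do not come from this article, so check the Ollama library page before relying on them.

```python
# Assumed mapping from distilled R1 checkpoints to Ollama library tags.
# These tags are not stated in the article; verify them before use.
DISTILLED_TAGS = {
    "DeepSeek-R1-Distill-Qwen-1.5B": "deepseek-r1:1.5b",
    "DeepSeek-R1-Distill-Qwen-7B": "deepseek-r1:7b",
    "DeepSeek-R1-Distill-Llama-8B": "deepseek-r1:8b",
    "DeepSeek-R1-Distill-Qwen-14B": "deepseek-r1:14b",
    "DeepSeek-R1-Distill-Qwen-32B": "deepseek-r1:32b",
    "DeepSeek-R1-Distill-Llama-70B": "deepseek-r1:70b",
}


def ollama_command(model_name: str, action: str = "run") -> list[str]:
    """Build the argv list for `ollama pull <tag>` or `ollama run <tag>`.

    This is a hypothetical helper for illustration, not part of Ollama.
    """
    if action not in ("pull", "run"):
        raise ValueError(f"unsupported action: {action}")
    if model_name not in DISTILLED_TAGS:
        raise ValueError(f"unknown distilled model: {model_name}")
    return ["ollama", action, DISTILLED_TAGS[model_name]]


if __name__ == "__main__":
    # Print the command instead of executing it, so nothing is downloaded by accident.
    print(" ".join(ollama_command("DeepSeek-R1-Distill-Llama-8B")))
```

Returning an argv list rather than a shell string means the result can be passed straight to `subprocess.run` without quoting concerns, if you choose to execute it.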

Microsoft recently made the R1 model and the distilled versions available on its Azure AI Foundry and GitHub. Personal projects leveraging a powerful language model. The key contributions of the paper include a novel approach to leveraging proof assistant feedback and advancements in reinforcement learning and search algorithms for theorem proving. To address this challenge, researchers from DeepSeek, Sun Yat-sen University, University of Edinburgh, and MBZUAI have developed a novel approach to generate large datasets of synthetic proof data. Synthetic data isn’t a complete solution to finding more training data, but it’s a promising approach. When using LLMs like ChatGPT or Claude, you are using models hosted by OpenAI and Anthropic, so your prompts and data may be collected by these providers for training and improving the capabilities of their models. In the official DeepSeek web/app, we do not use system prompts but design two specific prompts for file upload and web search for a better user experience. Compressor summary: The paper introduces a new network called TSP-RDANet that divides image denoising into two stages and uses different attention mechanisms to learn important features and suppress irrelevant ones, achieving better performance than existing methods. Using Jan to run DeepSeek R1 requires only the three steps illustrated in the image below.

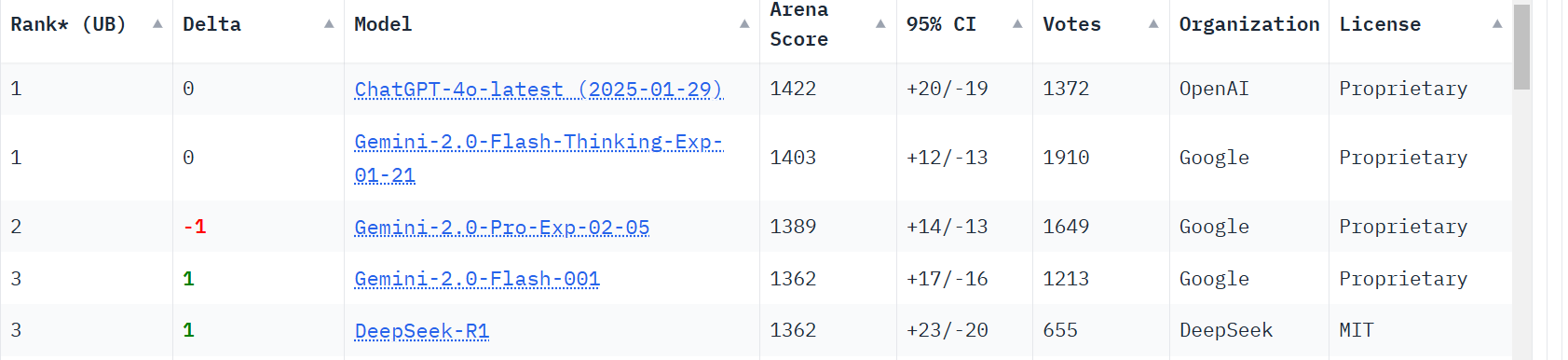

Using Ollama, you can run the DeepSeek R1 model 100% without a network using a single command. A local-first LLM tool is a tool that allows you to chat with and test models without using a network. Using the models through these platforms is a good alternative to using them directly through the DeepSeek Chat and APIs. Using tools like LMStudio, Ollama, and Jan, you can chat with any model you want, for example, the DeepSeek R1 model, 100% offline. A Swiss church conducted a two-month experiment using an AI-powered Jesus avatar in a confessional booth, allowing over 1,000 people to interact with it in various languages. This latest evaluation includes over 180 models! We perform an experimental evaluation on several generative tasks, namely summarization and a new task of summary expansion. So, for example, a $1M model might solve 20% of important coding tasks, a $10M model might solve 40%, a $100M model might solve 60%, and so on. Since the release of the DeepSeek R1 model, there has been a growing number of local LLM platforms for downloading and using the model without connecting to the Internet.
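To make the local-first idea concrete, here is a minimal sketch of talking to a model served by Ollama on your own machine, so the prompt never leaves localhost. It assumes that Ollama is already running on its default port (11434), that the tag `deepseek-r1:8b` has been pulled, and that the server's `/api/generate` endpoint returns the generated text in a `response` field. None of these details come from this article, and the function names are illustrative only.

```python
import json
import urllib.request

# Assumptions (not from the article): a local Ollama server on its default
# port, and a model tag that has already been pulled onto this machine.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "deepseek-r1:8b"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Create a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to localhost only and return the generated text."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the server is assumed to reply with one JSON object.
    return body["response"]
```

With the server running, `print(ask_local_model("Explain what a distilled model is in one sentence."))` would print the reply. Building the request is kept separate from sending it so the payload can be inspected without a server.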

Local Installation: Run DeepSeek-V3 locally with the open-source implementation. "Reinforcement learning is notoriously tough, and small implementation variations can lead to major performance gaps," says Elie Bakouch, an AI research engineer at Hugging Face. Yes, DeepSeek-V3 can be easily integrated into existing applications through our API or by using the open-source implementation. In this article, you learned how to run the DeepSeek R1 model offline using local-first LLM tools such as LMStudio, Ollama, and Jan. You also learned how to use scalable, enterprise-ready LLM hosting platforms to run the model. There may be several LLM hosting platforms missing from those mentioned here. Already, DeepSeek’s success may signal another new wave of Chinese technology development under a joint "private-public" banner of indigenous innovation. And that’s if you’re paying DeepSeek’s API fees. Is DeepSeek-V3 really free for commercial use? It is completely free for both personal and commercial purposes, offering full access to the source code on GitHub.
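For the API integration route, the sketch below shows roughly what a request to a hosted chat endpoint could look like using only the Python standard library. It assumes an OpenAI-style chat completions endpoint at `https://api.deepseek.com/chat/completions`, the model name `deepseek-chat`, and an API key stored in a `DEEPSEEK_API_KEY` environment variable. These specifics and the function names are assumptions rather than details from this article, so confirm them against the provider's API documentation.

```python
import json
import os
import urllib.request

# Assumptions (not from the article): endpoint URL, model name, and the
# environment variable that holds the API key.
API_URL = "https://api.deepseek.com/chat/completions"
DEFAULT_MODEL = "deepseek-chat"


def build_chat_request(
    user_message: str, api_key: str, model: str = DEFAULT_MODEL
) -> urllib.request.Request:
    """Create a single-turn, non-streaming chat request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "stream": False,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(user_message: str, timeout: float = 60.0) -> str:
    """Send one user message and return the assistant's reply text."""
    api_key = os.environ["DEEPSEEK_API_KEY"]  # raises KeyError if unset
    request = build_chat_request(user_message, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # Assumes the OpenAI-style response shape: choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

Unlike a local-first tool, this path sends your prompt to a remote provider, so it suits applications where hosted inference is acceptable.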
