Does DeepSeek Sometimes Make You Feel Stupid?

Author: Adriene | Date: 25-02-27 00:10 | Views: 46 | Comments: 0

DeepSeek Coder is composed of a series of code language models, each trained from scratch on 2T tokens, with a composition of 87% code and 13% natural language in both English and Chinese. Given the United States' comparative advantages in compute access and cutting-edge models, the incoming administration may find the time to be right to cash in and put AI export globally at the center of Trump's tech policy. The arrogance in this statement is only surpassed by the futility: here we are six years later, and the entire world has access to the weights of a dramatically superior model. If you are starting from scratch, start here. This brought a full evaluation run down to just hours. Sometimes, it skipped the initial full response entirely and defaulted to that answer. We started building DevQualityEval with initial support for OpenRouter because it offers a huge, ever-growing selection of models to query via one single API.

1.9s. All of this might seem pretty speedy at first, but benchmarking just 75 models, with 48 cases and 5 runs each at 12 seconds per task, would take us roughly 60 hours, or over 2 days, with a single process on a single host. Additionally, this benchmark shows that we are not yet parallelizing runs of individual models. Chain-of-thought models tend to perform better on certain benchmarks such as MMLU, which tests both knowledge and problem-solving in 57 subjects. Giving LLMs more room to be "creative" when it comes to writing tests comes with multiple pitfalls when executing those tests. "It is the first open research to validate that reasoning capabilities of LLMs can be incentivized purely through RL, without the need for SFT," DeepSeek researchers detailed. Such exceptions require the first option (catching the exception and passing), since the exception is part of the API's behavior. From a developer's point of view the latter option (not catching the exception and failing) is preferable, since a NullPointerException is usually not wanted and the test therefore points to a bug. Provide a passing test by using e.g. Assertions.assertThrows to catch the exception.
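The roughly 60 hour figure quoted above can be checked with a few lines of arithmetic. The sketch below uses only the numbers given in the text (75 models, 48 cases, 5 runs, 12 seconds per task); the variable and function names are illustrative and not taken from DevQualityEval.

```python
# Back-of-the-envelope check of the sequential benchmark duration.
# All figures come from the text; the names are illustrative only.
MODELS = 75
CASES_PER_MODEL = 48
RUNS_PER_CASE = 5
SECONDS_PER_TASK = 12


def sequential_duration_hours(models, cases, runs, seconds_per_task):
    """Return the total wall-clock hours when every task runs one after another."""
    total_tasks = models * cases * runs
    return total_tasks * seconds_per_task / 3600


total_tasks = MODELS * CASES_PER_MODEL * RUNS_PER_CASE
total_hours = sequential_duration_hours(
    MODELS, CASES_PER_MODEL, RUNS_PER_CASE, SECONDS_PER_TASK
)
total_days = total_hours / 24

print(f"{total_tasks} tasks, {total_hours:.0f} hours, {total_days:.1f} days")
```

With a single process on a single host this comes to 18,000 tasks and 2.5 days, which is why parallelizing runs matters for a benchmark of this size.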

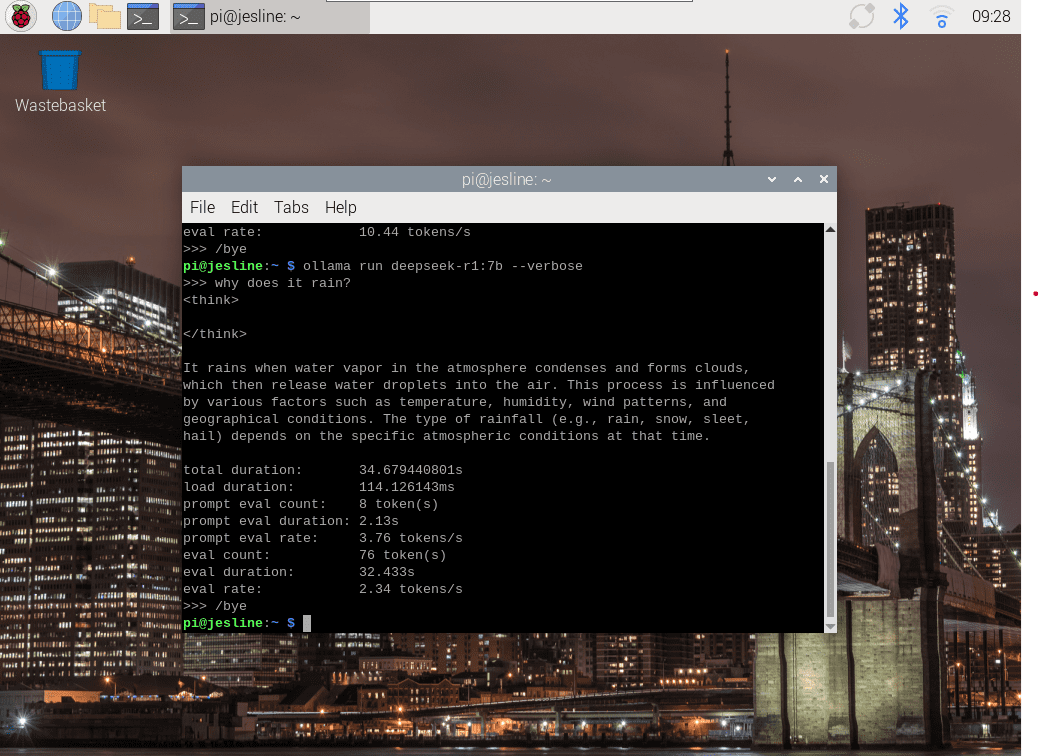

This becomes crucial when employees are using unauthorized third-party LLMs. We therefore added a new model provider to the eval that lets us benchmark LLMs from any OpenAI-API-compatible endpoint; this enabled us, for example, to benchmark gpt-4o directly via the OpenAI inference endpoint before it was even added to OpenRouter. That is why we added support for Ollama, a tool for running LLMs locally. Blocking an automatically running test suite for manual input should be clearly scored as bad code. The test cases took roughly 15 minutes to execute and produced 44 GB of log files. For faster progress we opted to apply very strict and low timeouts for test execution, since none of the newly introduced cases should require timeouts. A test that runs into a timeout is therefore simply a failing test. These examples show that the assessment of a failing test depends not just on the point of view (evaluation vs. user) but also on the language used (compare this section with panics in Go). As software developers we would never commit a failing test into production.
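The rule that a timed-out test counts as a failing test can be sketched as follows. This is a minimal illustration using Python's standard `subprocess` module, not the DevQualityEval implementation; the function names `run_with_timeout` and `is_failing` and the outcome labels are hypothetical.

```python
import subprocess
import sys


def run_with_timeout(command, timeout_seconds):
    """Run a test command and classify the result as "pass", "fail" or "timeout"."""
    try:
        completed = subprocess.run(
            command,
            capture_output=True,
            timeout=timeout_seconds,  # subprocess.run kills the child on expiry
        )
    except subprocess.TimeoutExpired:
        return "timeout"
    return "pass" if completed.returncode == 0 else "fail"


def is_failing(outcome):
    """A timeout is scored exactly like any other failing test."""
    return outcome != "pass"


if __name__ == "__main__":
    # Stand-in "test suites": one finishes at once, one runs far too long.
    quick = [sys.executable, "-c", "pass"]
    slow = [sys.executable, "-c", "import time; time.sleep(30)"]
    for name, command in (("quick", quick), ("slow", slow)):
        outcome = run_with_timeout(command, timeout_seconds=2)
        print(name, outcome, "failing" if is_failing(outcome) else "ok")
```

Keeping the timeout outcome separate from an ordinary failure before collapsing both into "failing" makes it possible to report why a test failed while still scoring it the same way.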

The second hurdle was to always receive coverage for failing tests, which is not the default for all coverage tools. Using standard programming language tooling to run test suites and receive their coverage (Maven and OpenClover for Java, gotestsum for Go) with default options results in an unsuccessful exit status when a failing test is invoked, and no coverage is reported. However, during development, when we are most keen to apply a model's result, a failing test could mean progress. Provide a failing test by just triggering the path with the exception. Another example, generated by Openchat, presents a test case with two for loops with an excessive number of iterations. However, we observed two downsides of relying entirely on OpenRouter: although there is usually just a small delay between a new release of a model and its availability on OpenRouter, it still sometimes takes a day or two. Since Go panics are fatal, they are not caught by testing tools, i.e. the test suite execution is abruptly stopped and there is no coverage. By the way, is there any specific use case in your mind?
