The DeepSeek Game


Author: Dani | Posted: 25-02-07 06:00


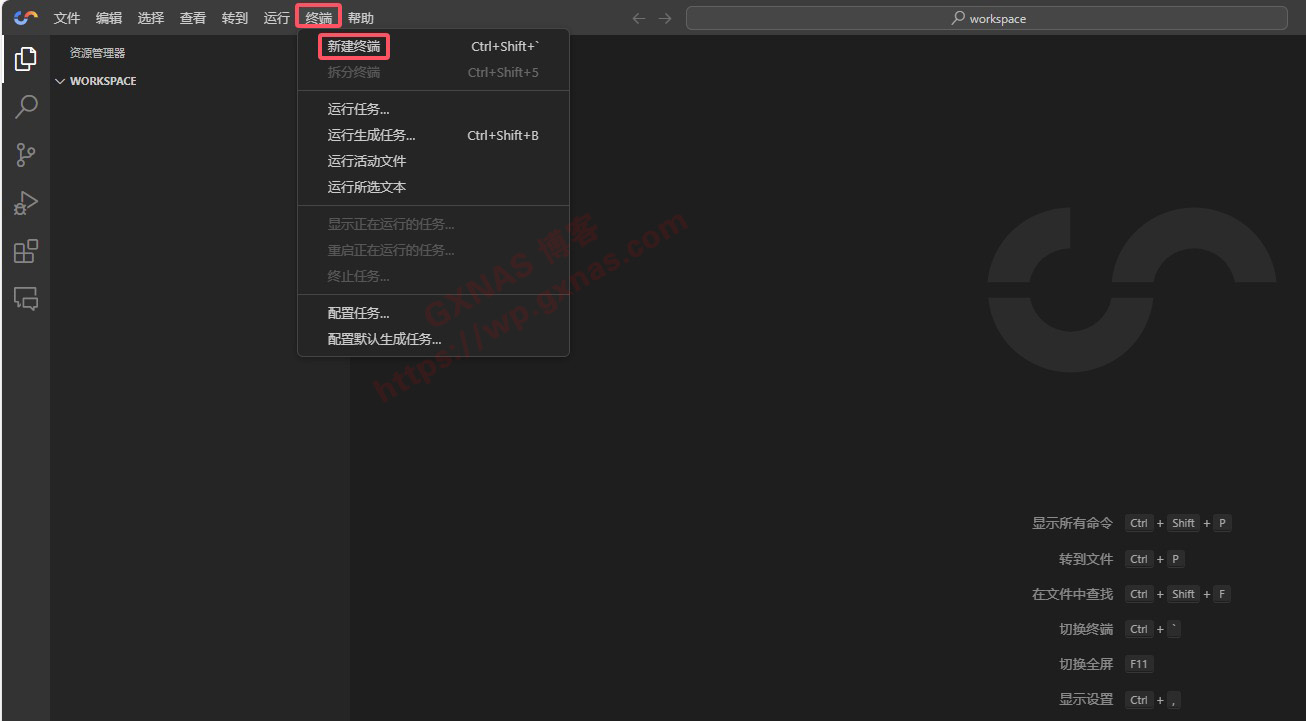

1. Click the DeepSeek icon in the Activity Bar. Quick access: open the webview with a single click from the status bar or the command palette. And because the models are open source, data scientists worldwide can download them and test them for themselves, and many report that they are roughly 10 times more efficient than what we had before. Smaller, specialized models trained on high-quality data can outperform larger, general-purpose models on specific tasks. All of this is interesting because the entire premise of the AI arms race, with NVIDIA supplying high-end GPUs and all the hyperscalers building massive data centers, is that you would need enormous amounts of computing power because LLM inference is inefficient.

• Transporting data between RDMA buffers (registered GPU memory regions) and input/output buffers.
• The model undergoes RL for reasoning, similar to R1-Zero, but with an added reward-function component for language consistency.

Additionally, users can download the model weights for local deployment, which gives them flexibility and control over how the model is run. The CodeUpdateArena benchmark is designed to test how well LLMs can update their own knowledge to keep up with real-world changes, such as updates to code APIs. You can also use vLLM for high-throughput inference.
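
The RL step described above adds a language-consistency term to the reward. The following is a minimal Python sketch, not DeepSeek's implementation. It assumes that consistency is scored as the fraction of chain-of-thought words written in the target language, and that this score is added to an exact-match accuracy reward. The helper `is_target_language_word` and the `weight` value are both hypothetical. The helper is deliberately crude: it treats any purely ASCII alphabetic word as English.

```python
import re


def is_target_language_word(word: str) -> bool:
    """Hypothetical check: treat a word as English if it is purely ASCII letters."""
    return word.isascii() and word.isalpha()


def language_consistency(cot: str) -> float:
    """Fraction of letter-only words in the chain of thought that pass the check."""
    words = re.findall(r"[^\W\d_]+", cot)  # runs of letters, no digits/underscores
    if not words:
        return 0.0
    hits = sum(1 for w in words if is_target_language_word(w))
    return hits / len(words)


def total_reward(answer: str, reference: str, cot: str, weight: float = 0.1) -> float:
    """Exact-match accuracy reward plus a weighted language-consistency bonus.

    `weight` is an illustrative assumption, not a published value.
    """
    accuracy = 1.0 if answer.strip() == reference.strip() else 0.0
    return accuracy + weight * language_consistency(cot)
```

With this scoring, a chain of thought that drifts into another language mid-reasoning earns a smaller bonus than one that stays in English, even when both final answers are equally correct.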

The integration of previous models into this unified version not only improves performance but also aligns more closely with user preferences than earlier iterations or competing models such as GPT-4o and Claude 3.5 Sonnet. DeepSeek 2.5: how does it compare to Claude 3.5 Sonnet and GPT-4o? Founded in 2023 and based in Hangzhou, Zhejiang, DeepSeek has gained attention for developing advanced AI models that rival those of major tech companies. Our principle of maintaining the causal chain of predictions is similar to that of EAGLE (Li et al., 2024b), but EAGLE's primary objective is speculative decoding (Xia et al., 2023; Leviathan et al., 2023), whereas we use MTP (Multi-Token Prediction) to improve training. To further investigate the correlation between this flexibility and the advantage in model performance, we additionally design and validate a batch-wise auxiliary loss that encourages load balance on each training batch instead of on each sequence. DeepSeek LLM 67B Chat had already demonstrated strong performance, approaching that of GPT-4. First, DeepSeek's approach potentially exposes what Clayton Christensen would call "overshoot" in current large language models (LLMs) from companies like OpenAI, Anthropic, and Google. Due to DeepSeek's Content Security Policy (CSP), this extension may not work after the editor is restarted.
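
The following minimal Python sketch illustrates the difference between sequence-wise and batch-wise load balancing. It uses the generic Switch-Transformer-style loss α·N·Σ f_i·P_i. Here, f_i is the fraction of routing slots sent to expert i, and P_i is that expert's mean router probability. This is an assumed, simplified form chosen for illustration. The auxiliary loss DeepSeek actually uses may differ, and the function names are hypothetical.

```python
from typing import List

Probs = List[List[float]]  # probs[t][i]: router probability of token t for expert i


def balance_loss(probs: Probs, top_k: int, alpha: float = 0.01) -> float:
    """Switch-style load-balance loss: alpha * N * sum_i f_i * P_i."""
    num_tokens = len(probs)
    num_experts = len(probs[0])
    counts = [0] * num_experts
    prob_sums = [0.0] * num_experts
    for row in probs:
        # Indices of the top_k experts this token is routed to.
        chosen = sorted(range(num_experts), key=lambda i: row[i], reverse=True)[:top_k]
        for i in chosen:
            counts[i] += 1
        for i, p in enumerate(row):
            prob_sums[i] += p
    f = [c / (num_tokens * top_k) for c in counts]  # share of routing slots
    p_mean = [s / num_tokens for s in prob_sums]    # mean router probability
    return alpha * num_experts * sum(fi * pi for fi, pi in zip(f, p_mean))


def sequence_wise(batch: List[Probs], top_k: int, alpha: float = 0.01) -> float:
    """Balance enforced inside every sequence, then averaged over sequences."""
    return sum(balance_loss(seq, top_k, alpha) for seq in batch) / len(batch)


def batch_wise(batch: List[Probs], top_k: int, alpha: float = 0.01) -> float:
    """Balance enforced over the whole batch: pool all tokens first."""
    tokens = [row for seq in batch for row in seq]
    return balance_loss(tokens, top_k, alpha)
```

As an example, take α = 1 and a batch of two sequences. One routes both of its tokens to expert 0 (`[[0.9, 0.1], [0.8, 0.2]]`), and the other routes both to expert 1 (`[[0.1, 0.9], [0.2, 0.8]]`). For this batch, `sequence_wise(batch, 1, 1.0)` is about 1.7, while `batch_wise(batch, 1, 1.0)` is about 1.0, which is the minimum for two experts. Pooling over the batch therefore tolerates per-sequence specialization, provided the batch as a whole stays balanced.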

Think of the H800 as a lower-cost GPU: to comply with the export control policy set by the US, Nvidia made some GPUs specifically for China. Follow the provided installation instructions to set up the environment on your local machine. Running the application: once it is installed and configured, run the application from the command line or an integrated development environment (IDE) as specified in the user guide. Configuration: configure the application as described in the documentation, which may involve setting environment variables, configuring paths, and adjusting settings to optimize performance. However, I do think a setting is different, in that people won't realize they have alternatives or know how to change it; most people literally never change any settings. Think of how YouTube disrupted traditional television: although it initially offered lower-quality content, its accessibility and zero cost to consumers revolutionized video consumption. What makes this interesting is how it challenges our assumptions about the scale and cost that advanced AI models require.
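
In practice, the configuration step often comes down to reading environment variables with sensible defaults. Below is a minimal Python sketch of that pattern. It assumes hypothetical variable names (`APP_MODEL_PATH`, `APP_MAX_BATCH_SIZE`, `APP_USE_GPU`) and defaults that do not come from any DeepSeek documentation.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class AppConfig:
    model_path: str
    max_batch_size: int
    use_gpu: bool


def load_config(env: Optional[Mapping[str, str]] = None) -> AppConfig:
    """Build a config from environment variables (names are hypothetical)."""
    env = os.environ if env is None else env
    model_path = env.get("APP_MODEL_PATH", "./models/default")
    max_batch_size = int(env.get("APP_MAX_BATCH_SIZE", "8"))
    if max_batch_size <= 0:
        raise ValueError("APP_MAX_BATCH_SIZE must be positive")
    # Accept common truthy spellings; anything else disables the GPU.
    use_gpu = env.get("APP_USE_GPU", "true").strip().lower() in {"1", "true", "yes"}
    return AppConfig(model_path, max_batch_size, use_gpu)
```

The loader accepts an explicit mapping instead of always reading `os.environ`, which keeps it easy to test, for example with `load_config({"APP_USE_GPU": "no"})`.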

While they have not yet succeeded with full organs, these new techniques are helping scientists gradually scale up from small tissue samples to larger structures. Trained on 14.8 trillion diverse tokens and incorporating advanced techniques like Multi-Token Prediction, DeepSeek-V3 sets new standards in AI language modeling. This is due to some standard optimizations like Mixture of Experts (though their implementation is finer-grained than usual) and some newer ones like Multi-Token Prediction, but mostly to the fact that they fixed everything that was making their training runs slow. Access to chat.deepseek.com may not work at the moment because of the CSP. We're actively working on a fix. I believe that OpenAI is still the best option. OpenAI o3-mini offers both free and premium access, with certain features reserved for paid users. Their latest o3 model demonstrates continued innovation, and features like Deep Research (available to $200/month Pro subscribers) show impressive capabilities. What is DeepSeek? DeepSeek AI is redefining what open-source AI can do, offering powerful tools that are not only accessible but also rival the industry's leading closed-source solutions. DeepSeek certainly opens up options for users seeking more affordable, efficient tools, while premium services keep their value proposition. Right Sidebar Integration: the webview opens in the right sidebar by default for easy access while coding.
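
The Mixture of Experts idea mentioned above routes each token to only a few experts. The sketch below shows generic softmax top-k gating in plain Python. It is an illustrative assumption, not DeepSeek's implementation. It omits the expert segmentation and shared experts that make DeepSeek's variant finer-grained; roughly speaking, a fine-grained design would use more, smaller experts and a larger `top_k`. The names `moe_forward` and `gate_weights` are hypothetical.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[List[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: List[float], gate_weights: List[List[float]],
                experts: List[Expert], top_k: int) -> List[float]:
    """Route x to the top_k experts and mix their outputs by renormalized gate weight."""
    # Router logits: one dot product per expert.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    probs = softmax(logits)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * len(x)
    for i in chosen:
        y = experts[i](x)   # only the selected experts are evaluated
        g = probs[i] / norm
        out = [o + g * yi for o, yi in zip(out, y)]
    return out
```

Only the `top_k` selected experts are evaluated, so per-token compute stays roughly constant as the total number of experts grows.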

