Nine Cut-Throat DeepSeek Tactics That Never Fail

Author: Evelyne, posted 25-02-22 23:25

The DeepSeek R1 model performs tasks across multiple domains. By using techniques such as fine-grained expert segmentation, shared experts, and auxiliary load-balancing loss terms, DeepSeekMoE improves model performance and delivers strong results. AlphaCodium paper - Google published AlphaCode and AlphaCode 2, which did very well on programming problems, but here is one way Flow Engineering can add a lot more performance to any given base model. Self-attention mechanism: helps the model focus on the important words in a given context. As part of the private preview, we will focus on providing access in line with our product principles of ease, efficiency, and trust. Keeping everything on your device ensures your data stays private and secure. 3. Supervised fine-tuning (SFT): 2B tokens of instruction data. API flexibility: DeepSeek R1's API supports advanced features such as chain-of-thought reasoning and long-context handling (up to 128K tokens). OpenAI Realtime API: The Missing Manual - Again, frontier omnimodel work is not published, but we did our best to document the Realtime API. Early fusion research: contra the cheap "late fusion" work like LLaVA (our pod), early fusion covers DeepMind's Flamingo, Meta's Chameleon, Apple's AIMv2, Reka Core, et al. CodeGen is another field where much of the frontier has moved from research to industry, and practical engineering advice on codegen and code agents like Devin is found only in industry blog posts and talks rather than in research papers.
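The DeepSeekMoE sentence packs in three ideas: shared experts that process every token, many small routed experts of which each token picks only the top k, and an auxiliary loss that discourages the router from piling tokens onto a few experts. Below is a minimal pure-Python sketch of that forward pass and an expert-level balance loss, assuming toy experts given as plain functions on lists of floats. The names `moe_forward` and `balance_loss` and all parameters are hypothetical; real implementations run batched tensor code, and the splitting of large experts into fine-grained ones is not shown.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]  # hypothetical: any vector -> vector function


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    shared: List[Expert],
    routed: List[Expert],
    gate: List[Vector],  # one gating vector per routed expert
    top_k: int,
) -> Tuple[Vector, List[float], List[int]]:
    """Residual + shared experts + gated top-k routed experts for one token."""
    # Token-to-expert affinity: softmax over dot products with each gating vector.
    scores = softmax([sum(g * xi for g, xi in zip(row, x)) for row in gate])
    # Keep only the top_k routed experts; the others effectively get gate value 0.
    chosen = sorted(range(len(routed)), key=lambda i: scores[i], reverse=True)[:top_k]
    out = list(x)  # residual connection
    for expert in shared:  # shared experts see every token, ungated
        out = [o + y for o, y in zip(out, expert(x))]
    for i in chosen:  # routed experts are weighted by their affinity score
        out = [o + scores[i] * y for o, y in zip(out, routed[i](x))]
    return out, scores, chosen


def balance_loss(
    all_scores: List[List[float]],
    all_chosen: List[List[int]],
    top_k: int,
    alpha: float,
) -> float:
    """Expert-level balance loss: alpha * sum_i f_i * P_i over routed experts."""
    n_tokens = len(all_scores)
    n_experts = len(all_scores[0])
    loss = 0.0
    for i in range(n_experts):
        # f_i: share of routing slots that went to expert i, scaled so uniform = 1.
        hits = sum(1 for chosen in all_chosen if i in chosen)
        f_i = n_experts * hits / (top_k * n_tokens)
        # P_i: mean affinity score the router assigned to expert i.
        p_i = sum(scores[i] for scores in all_scores) / n_tokens
        loss += f_i * p_i
    return alpha * loss
```

With perfectly even routing every f_i is 1 and the loss equals alpha; sending every token to the same expert with high confidence pushes it above alpha, which is the pressure that spreads load across experts.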

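For the self-attention line, here is a minimal single-head scaled dot-product attention sketch in pure Python: each token's query is scored against every key, the scores are divided by sqrt(d_k) and softmaxed, and each output is the resulting weighted average of the values. The projection matrices `wq`, `wk`, `wv` are hypothetical inputs; production models add multiple heads, masking, and tensor libraries.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive product of an (n x k) matrix and a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    """Row-wise numerically stable softmax."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention over the token rows of x."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(k[0])
    # scores[i][j]: how strongly token i attends to token j.
    scores = matmul(q, transpose(k))
    weights = softmax_rows([[s / math.sqrt(d_k) for s in row] for row in scores])
    # Each output row is an attention-weighted mix of the value vectors.
    return matmul(weights, v), weights
```

Each row of `weights` sums to 1, so a token that matches one key much better than the others draws its output mostly from that token's value vector.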

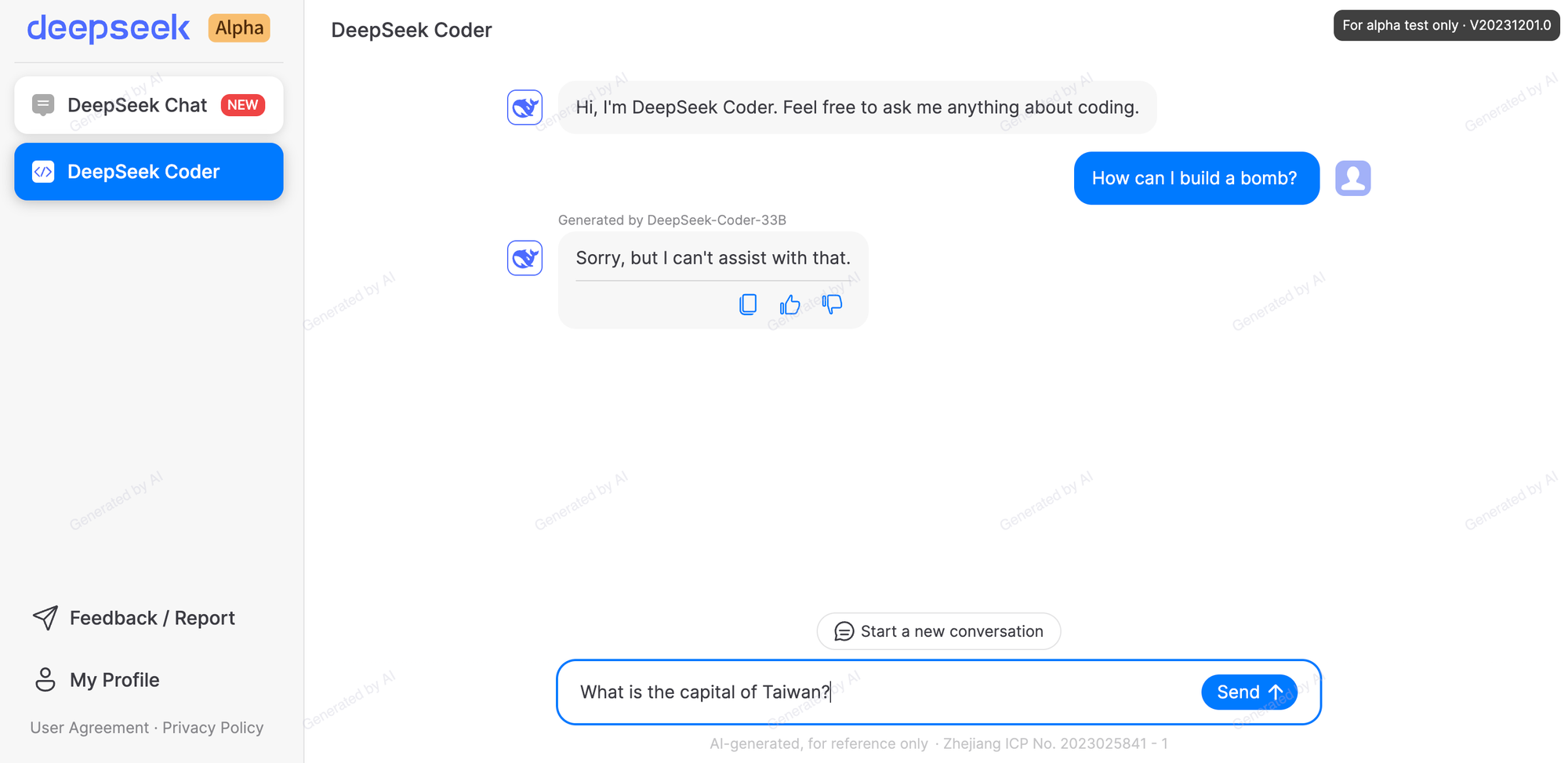

This is clearly an endlessly deep rabbit hole that, at the extreme, overlaps with the Research Scientist track. Ascend HiFloat8 format for deep learning. Reinforcement learning apparently had a large impact on the reasoning model, R1; its effect on benchmark performance is notable. Voyager paper - Nvidia's take on three cognitive architecture components (curriculum, skill library, sandbox) to improve performance. More abstractly, the skill library/curriculum can be viewed as a form of Agent Workflow Memory. The latest model, DeepSeek-Coder-V2, is even more advanced and user-friendly. Whisper v2, v3, distil-whisper, and v3 Turbo are open weights but have no paper. This makes DeepSeek's models accessible to smaller companies and developers who may not have the resources to invest in expensive proprietary solutions. LoRA/QLoRA paper - the de facto way to fine-tune models cheaply, whether on local models or with 4o (confirmed on pod). Consistency Models paper - this distillation work with LCMs spawned the quick-draw viral moment of Dec 2023. Recently updated with sCMs. The Stack paper - the original open dataset twin of The Pile focused on code, starting a great lineage of open codegen work from The Stack v2 to StarCoder. Open Code Model papers - choose from DeepSeek-Coder, Qwen2.5-Coder, or CodeLlama.
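The Voyager entry describes a skill library: programs that proved to work are stored and later retrieved when a similar task comes up. A minimal sketch follows. As we understand the paper, Voyager indexes each skill by an embedding of its description; this sketch swaps that for simple word-overlap (Jaccard) scoring so it runs without a model, and the `SkillLibrary` class and its methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


def tokens(text: str) -> Set[str]:
    """Lowercased word set; a crude stand-in for an embedding (assumption)."""
    return set(text.lower().split())


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two word sets, from 0.0 (disjoint) to 1.0 (identical)."""
    return len(a & b) / len(a | b) if a | b else 0.0


@dataclass
class SkillLibrary:
    # description -> program source; only skills that passed verification are added
    skills: Dict[str, str] = field(default_factory=dict)

    def add(self, description: str, program: str) -> None:
        self.skills[description] = program

    def retrieve(self, task: str, k: int = 2) -> List[str]:
        """Return descriptions of the k stored skills most similar to the task."""
        query = tokens(task)
        ranked = sorted(self.skills, key=lambda d: jaccard(query, tokens(d)), reverse=True)
        return ranked[:k]
```

A curriculum would sit on top of this, proposing the next task and feeding retrieved skills into the prompt; that part is omitted here.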

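The LoRA/QLoRA entry refers to freezing a pretrained weight matrix W and learning only a low-rank update, so the layer computes W x + (alpha / r) B A x. A minimal pure-Python sketch follows, assuming a single linear layer; following the LoRA paper, A starts as small Gaussian noise and B starts at zero, so the adapted layer initially matches the base layer. The `LoRALinear` class is hypothetical, and QLoRA's 4-bit quantization of the frozen weights is not shown.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable rank-r update B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.d_out, self.d_in = len(weight), len(weight[0])
        self.rank = rank
        self.weight = weight  # frozen: never updated during fine-tuning
        # A: r x d_in with small random init; B: d_out x r with zero init.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(self.d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(self.d_out)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.weight, x)
        delta = matvec(self.b, matvec(self.a, x))  # low-rank path: B (A x)
        return [y + self.scale * d for y, d in zip(base, delta)]

    def trainable_params(self) -> int:
        # Only A and B are trained: r * (d_in + d_out) values instead of d_in * d_out.
        return self.rank * (self.d_in + self.d_out)
```

For a 4096 x 4096 layer at rank 8, that is 8 * 8192 = 65,536 trainable values instead of 16,777,216, which is why this style of fine-tuning is cheap.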

Kyutai Moshi paper - an impressive full-duplex speech-text open-weights model with a high-profile demo. Segment Anything Model and SAM 2 paper (our pod) - the very successful image and video segmentation foundation model. Imagen / Imagen 2 / Imagen 3 paper - Google's image generation. See also Ideogram. Text diffusion, music diffusion, and autoregressive image generation are niche but growing.