Top 3 Lessons About DeepSeek To Learn Before You Hit 30

Post information

Author: Wanda | Date: 25-02-01 09:54 | Views: 5 | Comments: 0

Body

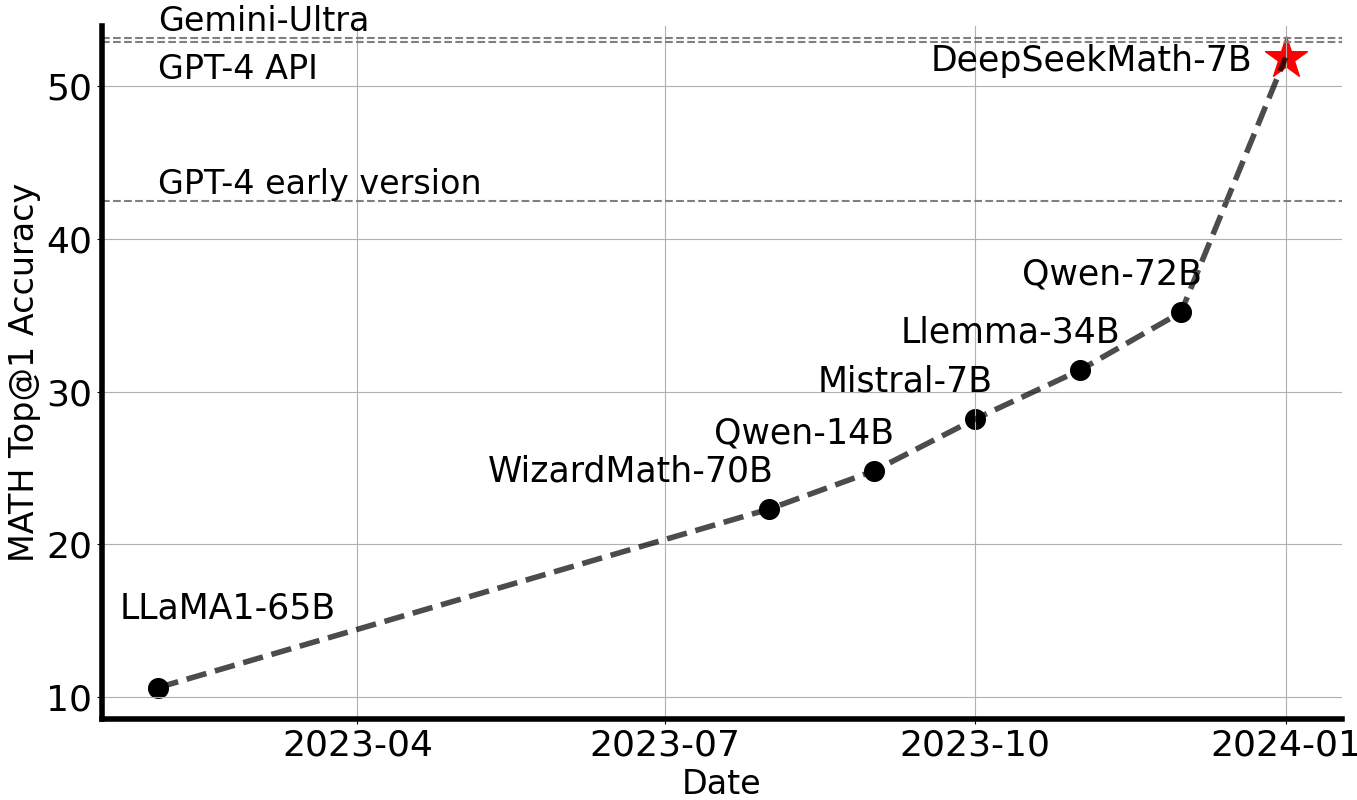

DeepSeek LLM uses the HuggingFace Tokenizer to implement the byte-level BPE algorithm, with specially designed pre-tokenizers to ensure optimal performance. Despite being in development for a few years, DeepSeek seems to have arrived almost overnight after the release of its R1 model on Jan 20 took the AI world by storm, mainly because it offers performance that competes with ChatGPT-o1 without charging you to use it. Behind the news: DeepSeek-R1 follows OpenAI in implementing this approach at a time when scaling laws that predict higher performance from bigger models and/or more training data are being questioned. DeepSeek claimed that it exceeded the performance of OpenAI o1 on benchmarks such as the American Invitational Mathematics Examination (AIME) and MATH. There is another evident trend: the cost of LLMs is going down while the speed of generation is going up, maintaining or slightly improving performance across different evals. On the one hand, updating CRA, for the React team, would mean supporting more than just a standard webpack "front-end only" React scaffold, since they're now neck-deep in pushing Server Components down everyone's gullet (I'm opinionated about this and against it, as you can tell).
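To make the byte-level BPE idea mentioned at the start of this paragraph concrete, here is a minimal toy sketch in plain Python. It is not the HuggingFace Tokenizers library and not DeepSeek's tokenizer: there is no pre-tokenizer, and the function names (`train_byte_bpe`, `merge_pair`, `encode`, `decode`) are hypothetical. It only shows the core loop, assuming training starts from raw UTF-8 bytes and repeatedly merges the most frequent adjacent pair.

```python
from collections import Counter
from typing import Dict, List, Tuple

Merges = Dict[Tuple[int, int], int]  # (left_id, right_id) -> merged token id


def merge_pair(ids: List[int], pair: Tuple[int, int], new_id: int) -> List[int]:
    """Replace every non-overlapping occurrence of `pair` with `new_id`."""
    out: List[int] = []
    i = 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_byte_bpe(text: str, num_merges: int) -> Merges:
    """Learn up to `num_merges` merges over the UTF-8 bytes of `text`."""
    # Byte-level: the base vocabulary is the 256 byte values, so any
    # string can be encoded without an "unknown" token.
    ids = list(text.encode("utf-8"))
    merges: Merges = {}
    next_id = 256
    for _ in range(num_merges):
        pairs = Counter(zip(ids, ids[1:]))
        if not pairs:
            break
        pair, count = pairs.most_common(1)[0]
        if count < 2:
            break  # no pair repeats, so merging would not compress further
        merges[pair] = next_id
        ids = merge_pair(ids, pair, next_id)
        next_id += 1
    return merges


def encode(text: str, merges: Merges) -> List[int]:
    """Encode text by replaying the merges in the order they were learned."""
    ids = list(text.encode("utf-8"))
    for pair, new_id in merges.items():  # dicts preserve insertion order
        ids = merge_pair(ids, pair, new_id)
    return ids


def decode(ids: List[int], merges: Merges) -> str:
    """Expand merged ids back to bytes and decode as UTF-8."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (left, right), new_id in merges.items():
        vocab[new_id] = vocab[left] + vocab[right]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")
```

A production tokenizer would additionally split the text with a pre-tokenizer before merging, so that merges do not cross word or script boundaries; that step is deliberately left out here.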

They identified 25 types of verifiable instructions and constructed around 500 prompts, with each prompt containing one or more verifiable instructions. After all, the amount of computing power it takes to build one impressive model and the amount of computing power it takes to be the dominant AI model provider to billions of people worldwide are very different amounts. So with everything I read about models, I figured if I could find a model with a very low number of parameters I could get something worth using, but the thing is that a low parameter count leads to worse output. We release the DeepSeek LLM 7B/67B, including both base and chat models, to the public. In order to foster research, we have made DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat open source for the research community. This produced the base model. Here is how you can use the Claude-2 model as a drop-in replacement for GPT models. CoT and test-time compute have been shown to be the future direction of language models, for better or for worse. To address data contamination and tuning for specific test sets, we have designed fresh problem sets to assess the capabilities of open-source LLM models.
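As an illustration of what a "verifiable instruction" can look like, the sketch below checks a model response against a few programmatically testable constraints. The three checkers (`max_words`, `includes_keyword`, `min_bullets`) and the helper names are hypothetical examples chosen for this sketch; they are not the 25 instruction types from the benchmark described above.

```python
import re
from typing import Callable, Dict

Check = Callable[[str], bool]


def max_words(limit: int) -> Check:
    """Instruction: 'answer in at most `limit` words'."""
    return lambda response: len(response.split()) <= limit


def includes_keyword(keyword: str) -> Check:
    """Instruction: 'mention `keyword`' (case-insensitive)."""
    return lambda response: keyword.lower() in response.lower()


def min_bullets(n: int) -> Check:
    """Instruction: 'use at least `n` bullet points' ('-' or '*' markers)."""
    pattern = re.compile(r"^\s*[-*] ", flags=re.MULTILINE)
    return lambda response: len(pattern.findall(response)) >= n


def check_response(response: str, checks: Dict[str, Check]) -> Dict[str, bool]:
    """Run every checker attached to a prompt and report each result."""
    return {name: check(response) for name, check in checks.items()}


def prompt_level_pass(results: Dict[str, bool]) -> bool:
    """A prompt passes only if all of its instructions are satisfied."""
    return all(results.values())
```

Because every check is a pure function of the response text, the evaluation needs no human or model judge, which is what makes the instructions "verifiable".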

YaRN: Efficient context window extension of large language models. Instruction-following evaluation for large language models. SmoothQuant: Accurate and efficient post-training quantization for large language models. FP8-LM: Training FP8 large language models. AMD GPU: Enables running the DeepSeek-V3 model on AMD GPUs via SGLang in both BF16 and FP8 modes. This revelation also calls into question just how much of a lead the US actually has in AI, despite repeatedly banning shipments of leading-edge GPUs to China over the past year. "It's very much an open question whether DeepSeek's claims can be taken at face value." United States' favor. And while DeepSeek's achievement does cast doubt on the most optimistic theory of export controls (that they could prevent China from training any highly capable frontier systems), it does nothing to undermine the more realistic theory that export controls can slow China's attempt to build a robust AI ecosystem and roll out powerful AI systems throughout its economy and military. DeepSeek's IP investigation services help clients uncover IP leaks, swiftly identify their source, and mitigate damage. Remark: We have rectified an error from our initial evaluation.

We show the training curves in Figure 10 and demonstrate that the relative error remains below 0.25% with our high-precision accumulation and fine-grained quantization strategies. The key innovation in this work is the use of a novel optimization technique called Group Relative Policy Optimization (GRPO), which is a variant of the Proximal Policy Optimization (PPO) algorithm. Obviously the last 3 steps are where the majority of your work will go. Unlike many American AI entrepreneurs who are from Silicon Valley, Mr Liang also has a background in finance. In data science, tokens are used to represent bits of raw data; 1 million tokens is equal to about 750,000 words. It has been trained from scratch on a vast dataset of 2 trillion tokens in both English and Chinese. DeepSeek threatens to disrupt the AI sector in a similar fashion to the way Chinese companies have already upended industries such as EVs and mining. CLUE: A Chinese language understanding evaluation benchmark. MMLU-Pro: A more robust and challenging multi-task language understanding benchmark. DeepSeek-VL possesses general multimodal understanding capabilities, capable of processing logical diagrams, web pages, formula recognition, scientific literature, natural images, and embodied intelligence in complex scenarios.
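The "group relative" part of GRPO can be sketched in a few lines. In the published description of the method, several responses are sampled for the same prompt and each response's advantage is its reward normalized by the group's mean and standard deviation, which replaces the learned value function that PPO uses. The function name `group_relative_advantages`, the `eps` stabilizer, and the use of the population standard deviation are assumptions of this sketch, and the clipped policy-gradient update and KL penalty are omitted.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each reward against the other samples for the same prompt.

    `rewards` holds one scalar reward per sampled response in the group.
    Assumption: population standard deviation; `eps` avoids division by
    zero when every response in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Example: four sampled answers to one prompt, rewarded 1.0 if correct.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Responses that score above the group average receive a positive advantage and those below receive a negative one; if all responses score the same, every advantage is zero and the group contributes no learning signal.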
